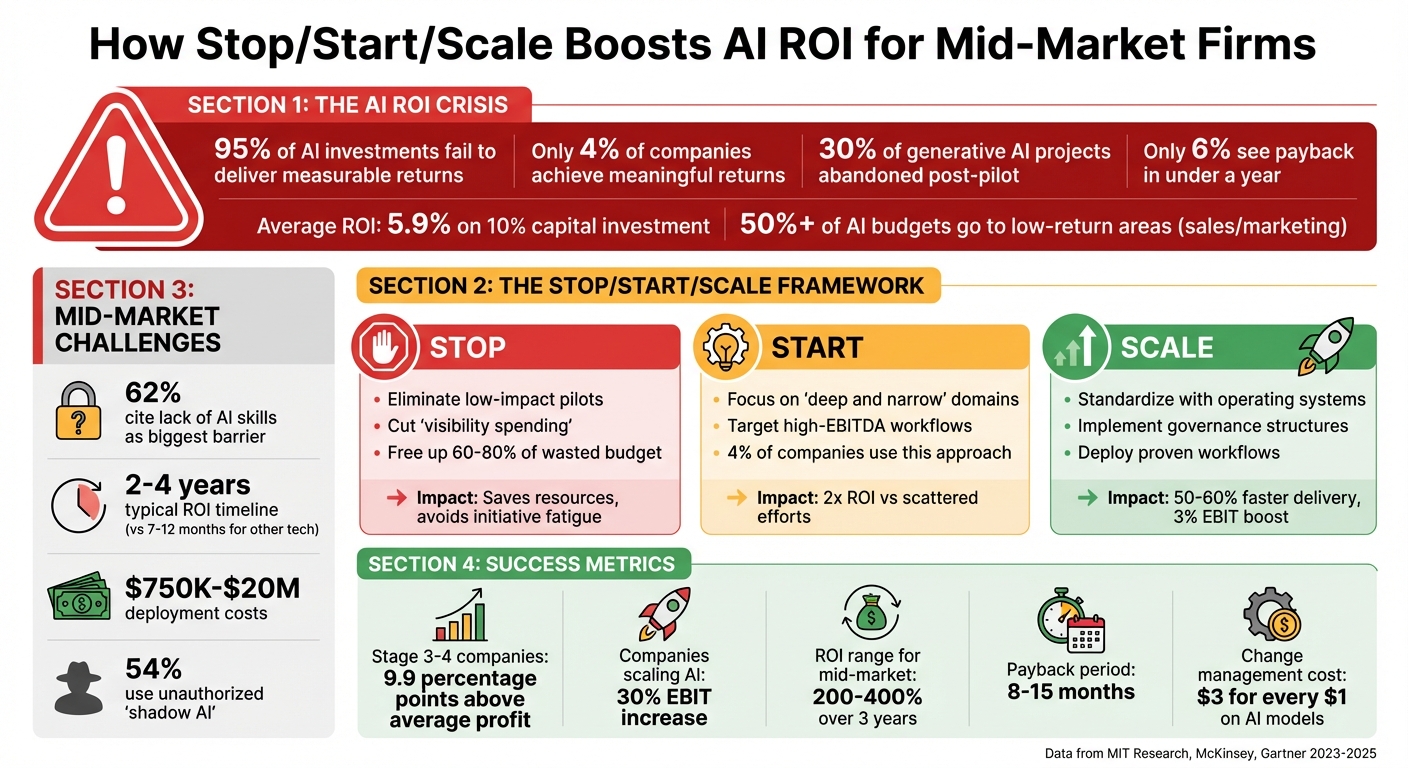

Mid-market companies face a tough reality: 95% of AI investments fail to deliver measurable returns. Despite billions spent, most firms struggle to see results because of misaligned tools, poor workflows, and overly long ROI timelines. The solution? The Stop/Start/Scale framework, a strategy to cut wasteful projects, focus on impactful initiatives, and expand proven workflows. Here's the breakdown:

- Stop: Eliminate low-impact AI projects stuck in pilot stages or failing to deliver financial results.

- Start: Prioritize targeted, high-value projects that solve specific business problems.

- Scale: Expand successful initiatives with standardized systems and clear governance.

Key stats:

- Only 6% of companies see AI payback in under a year.

- Over 50% of AI budgets go to low-return areas like sales and marketing.

- 30% of generative AI projects are abandoned post-pilot due to unclear value.


Why AI Investments Fail to Deliver Returns

The AI ROI Gap in Numbers

Enterprise AI projects often fall short of expectations, yielding an average ROI of just 5.9% against a 10% capital investment. Even more striking, only 4% of companies achieve meaningful returns. Projections also suggest that by the end of 2025, at least 30% of generative AI projects will be abandoned after the proof-of-concept stage.

The issue isn’t the technology itself but how it’s being deployed. Over 50% of AI budgets are funneled into sales and marketing tools, even though the most substantial ROI comes from back-office automation.

"The 95% failure rate for enterprise AI solutions represents the clearest manifestation of the GenAI Divide." - Aditya Challapally, Lead Author and MIT Researcher

Another key obstacle is the "learning gap." Tools like ChatGPT are highly adaptable for personal use but fall short in enterprise settings, where they often fail to align with specific organizational workflows. The result is fragmented systems across departments, poor integration, and data scattered in silos. For example, if a data team needs more than a week just to compile a dataset for an AI project, the infrastructure clearly isn't ready for scaling.

These shortcomings explain why mid-market firms face even greater challenges when it comes to AI investments.

Why Mid-Market Firms Are at Higher Risk

The challenges of generating ROI from AI hit mid-market firms particularly hard. Unlike large enterprises, these firms face unique constraints that amplify the risks.

One major hurdle is talent density. A staggering 62% of executives identify the lack of AI skills as the biggest barrier to delivering value. While Fortune 500 companies can attract top-tier AI talent, mid-market firms often struggle to bring in external experts or provide adequate training for their existing teams.

The financial strain is another significant challenge. AI investments typically require 2 to 4 years to deliver returns - far longer than the 7 to 12 months expected for typical technology investments. Only 6% of organizations report an AI payback period of less than a year. For mid-market firms operating with tighter budgets, this extended timeline can create serious cash flow pressures. To put it into perspective, deploying production-level AI can cost anywhere from $750,000 for basic Retrieval Augmented Generation (RAG) applications to $20 million for custom Large Language Models tailored to specific industries.
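To see why a 2-to-4-year horizon strains cash flow, it helps to compute a simple (undiscounted) payback period. The sketch below is a minimal illustration, assuming a constant net monthly benefit after deployment; the helper `payback_months` and the dollar figures are hypothetical, chosen only to show the arithmetic.

```python
import math


def payback_months(upfront_cost: float, monthly_net_benefit: float) -> float:
    """Simple (undiscounted) payback period in months.

    Assumes a constant net monthly benefit; if benefits ramp up gradually
    instead, the real payback period is longer than this estimate.
    """
    if monthly_net_benefit <= 0:
        return math.inf  # the project never pays for itself
    return upfront_cost / monthly_net_benefit


# Hypothetical example: a $750,000 RAG deployment (the low end of the
# range above) returning a net $25,000 per month.
months = payback_months(750_000, 25_000)
print(f"Payback: {months:.0f} months ({months / 12:.1f} years)")
```

Even at the low end of the cost range, this hypothetical project needs 30 months to break even, squarely inside the 2-to-4-year window rather than the 7-to-12 months finance teams usually expect.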

Siloed decision-making further complicates matters. When IT, data teams, and business units operate independently, progress grinds to a halt. Poor governance also fuels "shadow AI": 54% of employees use AI tools without formal approval. The result is fragmented innovation, duplicated effort, and mounting technical debt. To overcome these issues, companies need a clear strategy for terminating low-value projects and reallocating resources effectively.

Firms in the early stages of AI adoption (Stages 1 and 2) often experience financial performance below the industry average. Substantial ROI only becomes achievable at Stage 3, where organizations implement scalable AI systems and workflows. Unfortunately, reaching this level requires precisely the resources - time, budget, and talent - that mid-market firms often lack.

How the Stop/Start/Scale Framework Works

What Stop/Start/Scale Means

The Stop/Start/Scale framework is a three-step approach designed to turn AI investments into measurable outcomes by guiding where to invest - and where to cut back.

Stop focuses on cutting underperforming projects. Studies show that between 70% and 85% of generative AI initiatives never make it to production, wasting valuable budgets. These efforts often consume resources without delivering meaningful results. By halting these high-complexity, low-return projects, companies can redirect their focus toward more impactful areas.

Start emphasizes launching high-value, targeted initiatives. Instead of spreading resources thin across multiple use cases, organizations concentrate on a specific domain or process. For example, in late 2023, Reckitt, a consumer packaged goods company, zeroed in on marketing. By automating 100 targeted tasks, they sped up product concept development by 60% and improved marketing communication efficiency by 30%.

Scale involves expanding successful projects through structured systems. This step requires an "operating system" for AI, built on what Ayelet Israeli and Eva Ascarza call the 5Rs, including clear roles, responsibilities, rituals, and resource tracking. In early 2024, a medium-sized company within a Latin American conglomerate adopted this framework. In just six months, it raised the share of customer interactions handled by generative AI chatbots from 3% to 60% while improving model accuracy from 92% to 97%.

"AI initiatives fail less because models are weak and more because organizations are unprepared. The 5Rs turn scaling into a managed process." - Ayelet Israeli and Eva Ascarza

Only 4% of companies focus deeply on a few transformation priorities, yet those companies achieve double the ROI over time compared with firms whose efforts are scattered.

| Framework Component | Action | Mid-Market Impact |
|---|---|---|
| Stop | Cut low-impact pilots and "visibility spending" | Saves resources and avoids burnout from scattered initiatives |
| Start | Focus on "deep and narrow" domain reinvention | Builds competitive advantages that are hard to replicate |
| Scale | Implement standardized systems (Roles, Rituals, etc.) | Cuts delivery time by 50–60% and strengthens governance |

This method is particularly effective for mid-market firms, enabling them to overcome resource constraints and achieve strong returns on investment.

Why This Framework Fits Mid-Market Firms

This framework is tailored to address the specific challenges faced by mid-market companies, which often operate with tighter budgets, smaller teams, and less room for error compared to Fortune 500 giants.

The Stop phase helps these firms conserve resources by eliminating low-impact projects that drain budgets and cause "initiative fatigue." By clearing out these distractions, companies can focus their efforts on areas where they already have strengths.

The Start phase allows mid-market firms to rethink workflows within a single domain, avoiding the pitfalls of spreading talent too thin. This approach fosters unique processes that competitors can't easily copy. A great example is L'Oréal, which concentrated its generative AI efforts on the consumer journey. In 2024, their "Beauty Genius" chatbot conducted over 400,000 personalized consultations in its first six months in the U.S., doubling conversion rates in regions like Southeast Asia and the Middle East.

Finally, the Scale phase standardizes AI operations, cutting project delivery times by an estimated 50% to 60%. For mid-market firms with limited data science resources, this efficiency is a game-changer. By focusing on back-office AI applications - like coding automation or resource allocation - companies can achieve consistent returns without wasting money on flashy but low-impact initiatives.

Making Stop Decisions: Cutting Low-ROI Initiatives

How to Identify Initiatives to Stop

The first step in cutting low-ROI initiatives is understanding the difference between activity and impact. Many mid-market companies track metrics that don’t actually influence EBIT, costs, or revenue. If an initiative delivers impressive technical results but doesn’t move the needle on the profit-and-loss statement, it’s time to pull the plug.

Another red flag? Projects stuck in the proof-of-concept stage. These can drain resources, often consuming 60%–80% of budgets without delivering meaningful outcomes.

Low adoption is another clear indicator of failure. If frontline staff or middle managers aren’t using a tool, it’s not creating the intended value. In fact, 44% of employees who avoid using AI tools say they don’t see how these tools can help with their specific responsibilities.

A practical way to evaluate initiatives is by using a Value-to-Complexity Matrix. This tool helps map out projects based on their projected EBITDA impact versus how complex they are to implement. High-complexity, low-impact projects - often called “vanity efforts” - should be eliminated. Additionally, a one-week "Bottleneck Blitz" can uncover workflow obstacles and rank them by their EBITDA impact.
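A Value-to-Complexity Matrix can be sketched in a few lines. This is a minimal illustration, assuming each initiative has already been scored from 1 to 10 for projected EBITDA impact and for implementation complexity. The midpoint cutoff of 5, the quadrant labels other than "vanity effort", and the example initiatives are all hypothetical choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    ebitda_impact: int  # 1-10 score for projected EBITDA impact (hypothetical scale)
    complexity: int     # 1-10 score for implementation complexity (hypothetical scale)


def quadrant(item: Initiative, cutoff: int = 5) -> str:
    """Place an initiative in one of the four quadrants of the matrix."""
    high_value = item.ebitda_impact > cutoff
    high_complexity = item.complexity > cutoff
    if high_value and not high_complexity:
        return "quick win"       # prioritize first
    if high_value and high_complexity:
        return "strategic bet"   # fund deliberately, with clear KPIs
    if not high_value and not high_complexity:
        return "low priority"    # revisit later
    return "vanity effort"       # high complexity, low impact: stop


portfolio = [
    Initiative("Invoice matching automation", ebitda_impact=8, complexity=3),
    Initiative("Custom LLM for brand content", ebitda_impact=3, complexity=9),
    Initiative("Demand forecasting", ebitda_impact=7, complexity=7),
]
for item in portfolio:
    print(f"{item.name}: {quadrant(item)}")
```

The scores themselves are the hard part; the value of writing the rule down is that every initiative is judged by the same cutoffs, which keeps Stop decisions from turning into negotiations.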

Be wary of "visibility spending": initiatives designed more for publicity than for operational value. By contrast, back-office AI projects such as coding automation or resource allocation tend to deliver measurable returns.

By relying on clear, data-driven criteria, businesses can sidestep internal biases and make the tough calls to end projects that don’t deliver value.
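The three red flags described above (no P&L impact, a prolonged proof of concept, and low adoption) can be encoded as an explicit checklist. This is a minimal sketch, assuming you track these three fields for each initiative; the six-month pilot limit and the 30% adoption floor are hypothetical thresholds to be tuned to your own portfolio.

```python
from dataclasses import dataclass

# Hypothetical thresholds; adjust them to your own portfolio.
MAX_PILOT_MONTHS = 6
MIN_ADOPTION = 0.30


@dataclass
class InitiativeStatus:
    name: str
    pnl_impact_usd: float  # measured change in EBIT, costs, or revenue
    months_in_pilot: int   # 0 once the initiative is in production
    adoption_rate: float   # share of intended users actively using it (0-1)


def stop_flags(status: InitiativeStatus) -> list[str]:
    """Return every red flag from the criteria above that the initiative trips."""
    flags = []
    if status.pnl_impact_usd <= 0:
        flags.append("no measurable P&L impact")
    if status.months_in_pilot > MAX_PILOT_MONTHS:
        flags.append("stuck in proof of concept")
    if status.adoption_rate < MIN_ADOPTION:
        flags.append("low adoption")
    return flags


chatbot = InitiativeStatus("Internal FAQ chatbot", pnl_impact_usd=0,
                           months_in_pilot=9, adoption_rate=0.12)
print(stop_flags(chatbot))
```

An initiative that trips one or more flags becomes a Stop candidate for the review meeting; the checklist makes the case for stopping visible, while the final call stays with the project sponsor.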

Overcoming Resistance to Stopping

Identifying low-value initiatives is just the first hurdle. The real challenge is overcoming the internal resistance to shutting them down. It’s often harder to stop a failing project than to start a new one. One big reason? The sunk cost fallacy. Teams justify continuing projects based on the money already spent, rather than focusing on their future potential.

To combat this mindset, it’s helpful to shift the narrative. Instead of treating AI as an experimental playground, start viewing it as revenue-critical infrastructure. This shift changes the stakes: failure now has financial consequences. Projects need to prove they can launch, stay operational, and eventually pay for themselves.

"Proofs-of-concepts feel safe, yet they're where budgets die. When you frame an initiative as an 'experiment,' you accept that failure has no financial consequence."

– Superhuman Team

This quote highlights the importance of treating AI initiatives with a business-first mindset.

Defining clear metrics that link adoption to business impact - like EBITDA gains or churn reduction - can prevent teams from focusing on flashy demos with no measurable outcomes. Accountability is also key. Assigning a project sponsor to oversee an initiative from approval to measurable results ensures someone is responsible for delivering ROI. When sponsors know they’ll be held accountable, they’re more likely to support early decisions to stop underperforming projects.

Leadership support is crucial too. Regular executive meetings, such as biweekly committee reviews, help keep the focus on value. These meetings empower teams to address blockers or shut down failing initiatives without getting bogged down by internal politics or departmental inertia.

For a structured approach, consider focused sprints designed to drive clear decisions. Take HRbrain’s 5-day ROI Reset Sprint, for example. This process audits all AI spending and initiatives, categorizing them into Stop/Start/Scale decisions, and delivers a 30-day action plan with clear ownership and KPIs. By eliminating ambiguity, this framework helps align stakeholders and ensures tough decisions are made with confidence - an essential part of the broader Stop/Start/Scale strategy for achieving measurable ROI.

Starting High-Impact AI Workflows

What Makes an AI Initiative High-Impact

High-impact AI initiatives are those that create lasting advantages, making it tough for competitors to catch up. To achieve this, it’s crucial to avoid spreading resources thin across unrelated projects. Instead, successful mid-market companies adopt a "deep and narrow" approach - focusing intensely on one interconnected domain. This strategy has been shown to deliver twice the ROI compared to broader efforts. Yet, surprisingly, only 4% of companies currently follow this focused method.

Take Reckitt, for example. In late 2023, the company honed its AI efforts entirely on targeted marketing rather than dispersing resources across areas like procurement or HR. Their success came from treating AI as a critical revenue-driving tool - something that needed to "pay for itself" - rather than an experimental side project.

When launching new AI projects, tools like the Value-to-Complexity Matrix can help evaluate if you’re ready to move forward. This method scores potential initiatives based on their expected EBITDA impact alongside your current data readiness and infrastructure. Projects with clear, measurable KPIs - like incremental revenue, cost per transaction, or model accuracy - should take priority. For instance, between 2024 and 2025, Guardian Life Insurance Company of America automated its request for proposal (RFP) and quoting process, cutting proposal generation time from 5–7 days to just 24 hours.

To achieve these kinds of results, rethinking workflows is a must.

Redesigning Workflows Before Implementation

For AI initiatives to succeed, fixing flawed workflows is just as important as choosing the right technology. Starting an AI project without addressing underlying process issues is like painting over a cracked wall - it might look good temporarily, but the cracks will show again. Unfortunately, 59% of organizations take a technology-first approach, treating AI as an add-on rather than redesigning processes. This is likely why only 6% see a return on their AI investments within a year.

Before diving into AI implementation, consider running a one-week "Bottleneck Blitz" to uncover and rank obstacles like manual approvals, data silos, or outdated policies based on their EBITDA impact. By rethinking workflows - not just automating them - you set the stage for AI to deliver real, measurable results.
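The ranking step of a Bottleneck Blitz is easy to make explicit. The sketch below assumes, as a simplification, that an obstacle's EBITDA impact can be approximated by the labor cost it wastes over 48 working weeks a year; the obstacles, hours, and hourly costs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Bottleneck:
    description: str
    hours_lost_per_week: float  # staff time consumed by the obstacle
    loaded_hourly_cost: float   # fully loaded labor cost per hour, USD

    def annual_ebitda_impact(self) -> float:
        """Rough annual cost of the obstacle, assuming 48 working weeks."""
        return self.hours_lost_per_week * self.loaded_hourly_cost * 48


blitz_findings = [
    Bottleneck("Manual approval of purchase orders", 40, 55.0),
    Bottleneck("Re-keying data between siloed systems", 120, 45.0),
    Bottleneck("Outdated policy requiring paper sign-off", 15, 70.0),
]

# Rank obstacles from largest to smallest estimated impact.
ranked = sorted(blitz_findings, key=Bottleneck.annual_ebitda_impact, reverse=True)
for b in ranked:
    print(f"${b.annual_ebitda_impact():>10,.0f}  {b.description}")
```

Labor cost is only a proxy: an obstacle that delays revenue (for example, slow quoting) may matter more than its hours suggest, so teams may want to add a revenue term before ranking.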

A great example is Italgas Group. In 2024, before rolling out "WorkOnSite", a predictive AI tool for remote construction site management, the company completely redesigned its inspection workflow. The overhaul made project completion 40% faster and cut physical inspections by 80%, all while enhancing safety. They didn’t just slap AI onto an old process; they rebuilt the process to take full advantage of what AI could do.

"You have to start with the value and you have to start with the use case. AI is not a blunt instrument that should be applied everywhere."

– Andy McKinney, CEO, Able

The focus should be on augmentation, not mere automation. AI workflows should amplify human contributions rather than replace them. Research shows that 74% of employees who survive layoffs tied to "efficiency" AI report a drop in their own productivity. Instead of pushing employees to work faster under the same constraints, design workflows that free up time for strategic thinking and genuinely increase capacity.

One approach to this is HRbrain’s 3-week Workflow Transformation Sprint, priced between $18,000–$28,000. This program redesigns two workflows from start to finish, providing detailed implementation plans, playbooks, controls, and adoption strategies. By starting with reimagined processes, AI projects can deliver real results - not just technology for the sake of it.

Scaling Success: Expanding Proven Workflows

Subscription vs. Infrastructure: Cost Comparison

Once an AI workflow has demonstrated its value, the next step is figuring out how to scale it. For mid-market companies, this often boils down to a key decision: should they rely on subscription-based tools that charge per user or query, or should they invest in a more robust infrastructure that integrates AI models with internal systems and proprietary data?

Subscription models are appealing for their low upfront costs and quick deployment. They’re great for achieving fast results, but they come with limitations in terms of control and customization. On the other hand, building an integrated infrastructure requires a significant initial investment in areas like data engineering, API gateways, and change management. However, this approach offers long-term advantages by providing greater control and the ability to tailor solutions to specific needs. According to McKinsey, for every $1 spent on developing AI models, companies typically need to allocate $3 toward change management.

Here’s a quick look at how these two approaches stack up:

| Feature | Subscription Model | Integrated Infrastructure |
|---|---|---|
| Upfront Cost | Low; pay-per-seat or per-query | High; requires data engineering and integration |
| Long-term ROI | Limited; depends on vendor updates | High; builds proprietary competitive advantage |
| Customization | Low; off-the-shelf capabilities | High; connects AI to internal apps and data |
| Maintenance | Managed by the vendor | Requires internal MLOps and data pipelines |
| Speed to Market | Very fast; immediate access | Moderate; requires engineering but enables reuse |

While subscription tools offer a low barrier to entry, they often fall short when it comes to flexibility and scalability. Integrated infrastructure, though more expensive upfront, delivers reusable assets that can significantly improve development speed - by as much as 30% to 50%. Interestingly, the AI models themselves account for only about 15% of the total cost of a generative AI project. Subscription costs, meanwhile, can balloon as usage grows, whereas infrastructure investments unlock long-term efficiencies.
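The cost trade-off above comes down to a break-even calculation: per-seat fees grow linearly with time and headcount, while an integrated build front-loads its cost. This is a minimal sketch with hypothetical prices, not vendor quotes; it ignores the customization and control benefits in the table, which don't show up in a pure cost comparison.

```python
from typing import Optional


def cumulative_subscription_cost(months: int, seats: int, price_per_seat: float) -> float:
    """Total spend on a per-seat subscription after `months` months."""
    return months * seats * price_per_seat


def cumulative_infrastructure_cost(months: int, upfront: float, monthly_run: float) -> float:
    """Upfront build cost plus ongoing running cost (MLOps, pipelines, hosting)."""
    return upfront + months * monthly_run


def break_even_month(seats: int, price_per_seat: float, upfront: float,
                     monthly_run: float, horizon: int = 60) -> Optional[int]:
    """First month in which the integrated build is no more expensive, or None."""
    for month in range(1, horizon + 1):
        infra = cumulative_infrastructure_cost(month, upfront, monthly_run)
        subs = cumulative_subscription_cost(month, seats, price_per_seat)
        if infra <= subs:
            return month
    return None  # the subscription stays cheaper over the whole horizon


# Hypothetical inputs: 400 seats at $60/month vs. a $300,000 build
# that costs $8,000/month to run.
month = break_even_month(seats=400, price_per_seat=60.0, upfront=300_000, monthly_run=8_000)
print(f"Integrated build becomes cheaper in month {month}")
```

With these inputs the build pays off in month 19. If seat counts or query volumes grow, subscription costs rise faster and break-even arrives sooner; with only a handful of users it may never arrive, which is when the subscription model is the right call.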

Understanding these cost dynamics is crucial for scaling AI in a way that avoids unnecessary complexity or expense.

How to Scale Without Losing Control

Scaling AI effectively requires more than just managing costs - it demands standardization to avoid chaos. Allowing different departments to choose their own tools can lead to a fragmented setup with multiple interfaces and orchestration layers, making it nearly impossible to maintain efficiency or track results. To avoid this pitfall, adopt a "one of everything" approach: one chat interface, one orchestration layer, and one vector store.

A great example of controlled scaling comes from Vanguard Group. Between 2024 and 2025, the asset management firm estimated nearly $500 million in AI-driven ROI. How? They focused on deploying AI agents in call centers to speed up issue resolution and implemented AI-assisted code generation, which boosted programming productivity by 25% and cut the system development life cycle by 15%. Vanguard succeeded by standardizing its tools and expanding only the workflows that showed measurable results.

To maintain control as you scale, consider these strategies:

- Centralized API Gateway: This acts as a secure interface for authenticating users, ensuring compliance, logging requests for billing, and routing queries to the most cost-effective models. It also prevents vendor lock-in and provides visibility into usage costs across teams.

- 90-Day Scale Rule: Scale an AI initiative only when its KPIs outperform a control group for three straight weeks. If it doesn’t meet this benchmark, either retire it or rework it.

- Tiered Model Strategy: Use smaller, fine-tuned models for routine, high-volume tasks, and reserve larger, more expensive models for complex, high-impact use cases.
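The 90-Day Scale Rule can be checked mechanically once weekly KPIs are recorded for the pilot and a control group. This is a minimal sketch under stated assumptions: a higher KPI is better, "90 days" is treated as the first 13 weekly readings, and the function name `meets_scale_rule` and the sample data are hypothetical.

```python
def meets_scale_rule(pilot_kpi: list[float], control_kpi: list[float],
                     required_streak: int = 3, max_weeks: int = 13) -> bool:
    """True if the pilot beat the control group for `required_streak`
    consecutive weeks within the first `max_weeks` weekly readings.

    Assumes a higher KPI is better and both lists hold one value per week.
    """
    streak = 0
    for week, (pilot, control) in enumerate(zip(pilot_kpi, control_kpi)):
        if week >= max_weeks:
            break  # outside the 90-day window
        streak = streak + 1 if pilot > control else 0  # any miss resets the streak
        if streak >= required_streak:
            return True
    return False


# Hypothetical weekly resolution rates for a pilot and its control group.
pilot = [0.61, 0.64, 0.60, 0.66, 0.68, 0.70]
control = [0.62, 0.62, 0.62, 0.62, 0.62, 0.62]
print("Scale" if meets_scale_rule(pilot, control) else "Retire or rework")
```

Requiring consecutive weeks, rather than any three good weeks, filters out pilots whose results bounce around the control group by chance.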

Finally, scaling AI isn’t just about technology. Companies that make over 75% of their technology and data accessible organization-wide are 40% more likely to scale AI use cases successfully. Breaking down silos, creating reusable components, and ensuring departments can access the workflows and data they need are key steps toward sustainable growth. By focusing on these principles, organizations can scale AI in a way that’s both efficient and impactful.


Governance and Accountability for Stop/Start/Scale

Building Governance Structures That Work

Governance acts as the bridge between strategic AI initiatives and measurable outcomes. Without it, decisions about what to stop, start, or scale can falter. For mid-sized companies, the challenge lies in finding a balance - systems need to be streamlined enough to avoid unnecessary red tape but structured enough to maintain oversight. The secret? Integrating transparent AI governance from the very beginning rather than tacking it on as an afterthought [6, 43].

One approach that has shown promise is the two-track review board system. Guardian Life Insurance Company of America adopted this model between 2024 and 2025 under CEO Andrew McMahon. They created two separate boards: one for high-risk projects requiring detailed scrutiny and another for lower-risk initiatives needing quicker decisions. Each board included experts in technical risk, data privacy, and cybersecurity. This setup enabled a pilot program that slashed their RFP and quoting process from seven days to just 24 hours. Plans are already in place to scale this success further by 2026 [6, 11].

Another example comes from Italgas Group, Europe’s largest natural gas distributor. They established a "Digital Factory", bringing together 18 cross-functional teams to develop minimum viable products in four-month sprints. Each team had a C-level sponsor to ensure alignment with broader company goals. Governance was led by an AI Director who reported to both the Chief People, Innovation, and Transformation Officer and the CIO. This setup not only kept projects on track but also delivered tangible results, such as their "WorkOnSite" AI solution, which generated $3 million in revenue in 2024 [6, 11].

"Now is the time for executive teams to align, commit, and lead the charge toward enterprise-scale AI by developing a playbook for strategy, systems, synchronization, and stewardship."

- Stephanie L. Woerner, Director and Principal Research Scientist, MIT CISR

These governance models illustrate how thoughtful structures can drive accountability and success in AI projects.

Assigning Ownership and Tracking Progress

Once governance is in place, the next step is assigning clear ownership. Without it, even the best governance structure can fail to deliver results. The embedded ops model is one way to ensure accountability. This approach places AI specialists directly within business units, while a lean Center of Excellence oversees the broader strategy. Assigning a single executive to own risk management further simplifies accountability [9, 43].

To prioritize projects effectively, companies can use a value-to-complexity matrix. This tool scores initiatives based on their potential EBITDA impact and the difficulty of deployment, making it easier to decide which projects to stop, start, or scale.

Tracking progress requires discipline. Weekly OKR reviews can help align AI efforts with broader business goals. Vanguard Group provides a great example of this practice: the firm rigorously monitors model performance and usage and estimates nearly $500 million in AI-driven ROI. To ensure widespread adoption, it launched the "Vanguard AI Academy", where half of its employees completed training. This approach distributed AI ownership across the workforce rather than confining it to IT.

Another effective method is using a 90-day execution playbook. This plan breaks deployments into three 30-day phases: identifying blockers in the first month, launching embedded ops in the second, and demonstrating financial impact in the third. This structured timeline ensures AI initiatives maintain momentum and deliver measurable results.

Measuring Success: KPIs for Stop/Start/Scale

Key Metrics to Track

Tracking the right metrics is what separates AI projects that drive real value from those that drain resources. Mid-market companies need a balanced approach, focusing on efficiency, productivity, adoption, quality, and financial returns.

Start with efficiency metrics that turn time savings into measurable outcomes. Skip vague claims like "improved workflows" and aim for specifics like "25 extra proposals generated monthly" or "cycle time cut by 20%". For instance, in 2024, Nestlé replaced paper-based expense processes with AI-powered tools in SAP Concur. The result? Manual expense management was eliminated entirely, and employee efficiency in creating expense reports tripled.

When it comes to financial metrics, CFOs need to see the numbers. Focus on Net Present Value (NPV) to assess value beyond capital costs, Internal Rate of Return (IRR) to measure performance against benchmarks, and Payback Period to understand how quickly returns are realized. For mid-sized companies with development teams of 200 to 1,000, AI investments typically range from $500,000 to $2 million, delivering a 200–400% ROI over three years and payback periods of just 8 to 15 months.
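NPV and IRR are straightforward to compute from a projected cash-flow series. This is a minimal sketch, assuming annual cash flows with the upfront investment at year 0 and a conventional profile (one outflow followed by inflows), so that NPV falls as the discount rate rises and bisection finds a single IRR. The cash flows and the 10% discount rate are hypothetical.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] is the upfront (year-0) flow."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], low: float = -0.99, high: float = 10.0,
        tol: float = 1e-7) -> float:
    """Internal rate of return by bisection on the NPV function.

    Assumes a conventional cash-flow profile, so NPV is positive at `low`,
    negative at `high`, and crosses zero exactly once in between.
    """
    for _ in range(200):
        mid = (low + high) / 2
        if npv(mid, cash_flows) > 0:
            low = mid   # still profitable at this rate, so the IRR is higher
        else:
            high = mid
        if high - low < tol:
            break
    return (low + high) / 2


# Hypothetical AI investment: $1M upfront, then three years of net benefits.
flows = [-1_000_000, 400_000, 500_000, 600_000]
print(f"NPV at 10%: ${npv(0.10, flows):,.0f}")
print(f"IRR: {irr(flows):.1%}")
```

A positive NPV at the company's hurdle rate and an IRR above that rate are the two signals a CFO will look for; for non-conventional cash flows (for example, a second large outflow mid-project), IRR can have multiple roots and NPV is the safer guide.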

Adoption and quality metrics are equally important to gauge whether your AI tools are being used effectively. Keep an eye on Monthly Active Users (MAU), retention rates (how many users keep coming back), and account-level penetration. You can also track issues like "Unsupported Requests" (where the AI falls short) and "Rage Prompting" (user frustration) to decide whether to refine or halt a project. Atera’s integration of Azure OpenAI in 2025 is a great example - technicians boosted their output from 7 to 70 cases daily, a 10× productivity increase.
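Monthly Active Users and retention can be derived directly from a usage log. This is a minimal sketch, assuming a hypothetical log of (user, date) pairs recording each day a user ran at least one AI query; the user names and dates are illustrative.

```python
from datetime import date

# Hypothetical usage log: (user_id, day the user ran at least one AI query).
events = [
    ("ana", date(2025, 3, 4)), ("ana", date(2025, 4, 2)),
    ("ben", date(2025, 3, 10)),
    ("cai", date(2025, 3, 21)), ("cai", date(2025, 4, 15)),
    ("dev", date(2025, 4, 7)),
]


def active_users(log, year: int, month: int) -> set[str]:
    """Users with at least one event in the given calendar month."""
    return {user for user, day in log if day.year == year and day.month == month}


def retention(log, prev: tuple[int, int], curr: tuple[int, int]) -> float:
    """Share of the previous month's active users who were active again."""
    before = active_users(log, *prev)
    after = active_users(log, *curr)
    return len(before & after) / len(before) if before else 0.0


march = active_users(events, 2025, 3)
april = active_users(events, 2025, 4)
print(f"MAU March: {len(march)}, April: {len(april)}")
print(f"March-to-April retention: {retention(events, (2025, 3), (2025, 4)):.0%}")
```

Note that MAU is flat at 3 in this example while retention is only 67%: one user churned and a new one replaced them. Tracking both metrics separates genuine habit formation from churn hidden by new sign-ups.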

"The lack of measurable return often isn't due to a lack of value, but rather the difficulty of measuring that value or return on investment (ROI)." - You.com Enterprise Guide

Before making decisions, collect 8–12 weeks of baseline data on processing times, error rates, customer satisfaction, and revenue per transaction. Without this foundational data, it’s tough to assess whether your Stop/Start/Scale choices are delivering results.
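Once a baseline exists, the comparison itself is simple. This is a minimal sketch, assuming one aggregated value per week for a single metric; the function name and the processing-time figures are hypothetical, and the eight-week minimum mirrors the lower end of the guidance above.

```python
from statistics import mean


def change_vs_baseline(baseline: list[float], current: list[float],
                       min_baseline_weeks: int = 8) -> float:
    """Relative change in a weekly metric versus its pre-deployment baseline.

    Raises ValueError if fewer than `min_baseline_weeks` of baseline data
    exist, since a short baseline makes the comparison unreliable.
    """
    if len(baseline) < min_baseline_weeks:
        raise ValueError(f"need at least {min_baseline_weeks} weeks of baseline data")
    return (mean(current) - mean(baseline)) / mean(baseline)


# Hypothetical average processing time per request (hours), one value per week.
before = [30, 32, 29, 31, 33, 30, 28, 31]
after = [24, 23, 25, 24]
print(f"Processing time change: {change_vs_baseline(before, after):+.1%}")
```

For a metric like processing time, a negative change is an improvement; for revenue per transaction or customer satisfaction, the sign flips, so it pays to record the desired direction alongside each KPI.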

These metrics directly guide decisions on which AI projects to stop, refine, or scale. With a solid baseline in place, tracking ROI over time becomes critical for understanding the long-term impact of your AI initiatives.

Tracking ROI Over Time

ROI doesn’t happen overnight - it evolves as you move through pilot programs, scaling efforts, and long-term implementation. Consistent tracking across these phases ensures AI benefits are realized and amplified.

Most companies see meaningful ROI from AI within 2 to 4 years, which is notably longer than the 7 to 12 months typically expected for other tech investments. This extended timeline highlights the need for ongoing measurement. ROI tracking should follow three phases: formulating hypotheses with business leaders, testing and building a business case, and scaling the solution.

Take PayPal, for example. In November 2023, the company introduced transformer-based deep learning for fraud detection. By analyzing over 200 petabytes of data, PayPal cut fraud-related losses by 11% and nearly halved its loss rate between 2019 and 2022, even as payment volume soared to $1.36 trillion. This case shows how ROI can grow over time when AI projects are effectively scaled.

Understanding the distinction between operational and strategic benefits is key for long-term tracking. Operational gains focus on efficiency improvements, while strategic benefits enhance differentiation, resilience, and scalability. Companies that scale AI successfully often see a 3 percentage point boost in EBIT, which translates to nearly a 30% increase compared to companies that don’t scale. To capture the full value of your AI decisions, monitor both operational and strategic impacts.
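The two EBIT figures in this paragraph are linked by simple arithmetic. Writing $m_0$ for the EBIT margin of companies that don't scale and $\Delta m$ for the 3-percentage-point boost, a roughly 30% relative increase implies a baseline margin of about 10%. That baseline is inferred from the stated numbers, not reported directly:

```latex
\frac{\Delta m}{m_0} \approx 0.30, \qquad \Delta m = 3\%
\quad\Longrightarrow\quad
m_0 \approx \frac{3\%}{0.30} = 10\%
```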

Lastly, make sure to set clear goals for how reclaimed hours will be used. Efficiency gains only matter if those saved hours are redirected toward high-value activities. Without this focus, time savings won’t necessarily lead to a stronger bottom line.


Conclusion

The gap in AI ROI isn't about the technology itself - it’s about how it’s put into action. For mid-market companies, the luxury of endless experimentation without a clear payoff simply doesn’t exist. That’s where the Stop/Start/Scale framework steps in, shifting the focus from perpetual pilot programs to fully scaled, production-ready workflows that deliver tangible financial results.

Firms stuck in the early stages of AI maturity often experience lower profit margins. In contrast, those that stop wasting resources on low-value projects, start implementing high-impact workflows, and scale what works see a real competitive advantage. By Stage 3, these companies enjoy profit margins that are 0.8 percentage points above average. By Stage 4, this advantage skyrockets to 9.9 percentage points above the norm.

For mid-market businesses, the framework is particularly effective because it aligns with their realities: tighter budgets, smaller teams, and less room for trial-and-error. The earlier case studies prove this point - targeted AI workflows have slashed process times and boosted revenue. These outcomes weren’t about buying more AI tools but about rethinking workflows, assigning clear accountability, and focusing on the right KPIs.

The key to success lies in setting clear metrics and ensuring that the time saved is reinvested into high-value tasks. It’s worth noting that for every $1 spent developing an AI model, companies should plan to spend $3 on managing change. Without this groundwork, even the most advanced AI solutions won’t deliver meaningful results.

FAQs

How can mid-market companies address the lack of AI expertise to achieve better results?

Mid-market companies facing challenges with limited AI expertise can achieve better results by narrowing their focus to a few key projects rather than spreading their resources thin across multiple pilots. Zeroing in on a specific strategic area enables teams to map out workflows, assign clear roles, and refine processes effectively. This approach not only ensures scalability but also makes AI solutions more impactful.

Establishing a solid governance framework is another critical step. This means setting clear data-quality standards, defining decision-making roles, and forming a central AI steering committee. These measures help avoid fragmented efforts and ensure that AI specialists are brought into the process at the right time.

Using short, targeted sprints that deliver actionable "Stop/Start/Scale" outcomes can accelerate the journey from AI concepts to production-ready solutions with measurable KPIs. By pairing AI experts with existing staff and integrating new processes into everyday operations, companies can upskill their teams while making the most of their limited AI talent. Regular reviews and adjustments to workflows and governance further drive improvements and deliver measurable returns on investment.

How can I identify AI projects that are not delivering ROI and should be stopped?

AI projects that fail to deliver measurable results often display similar warning signs. For instance, if a project shows no improvement in key metrics like revenue growth or EBIT, it might not justify further investment. Research indicates that many AI pilots, especially those using generative AI, struggle to achieve clear business outcomes.

Common issues include the absence of defined KPIs, clear ownership, or a solid governance structure, which can cause projects to stall and provide little value. Additional red flags include prolonged pilot phases with no progress, misalignment with overarching business goals, and an inability to demonstrate cost savings or operational efficiencies, even as resources continue to be poured in. When these challenges arise, it’s often wiser to discontinue the initiative and redirect resources toward more promising opportunities.

How does the Stop/Start/Scale framework help mid-market companies achieve better AI ROI?

The Stop/Start/Scale framework is a practical approach for mid-market companies to make smarter decisions about their AI investments, focusing on projects that deliver measurable outcomes. Here's how it works: businesses stop initiatives that suffer from issues like unclear ownership, unreliable data, or poor governance, avoiding common traps like fragmented systems and wasted resources. Next, they start projects that are aligned with clear goals, key performance indicators (KPIs), and accountability measures, ensuring every effort aligns with broader business objectives. Finally, they scale only those initiatives that have proven their value, allowing companies to maximize returns while keeping risks in check.

Mid-market companies hold a unique position. They’re nimble enough to deploy AI solutions quickly, yet their projects are complex enough to create meaningful changes. The Stop/Start/Scale framework taps into this sweet spot, enabling businesses to adapt swiftly, reinvest profits, and bridge the ROI gap that often plagues AI adoption. By using this framework, mid-market leaders can transform their AI budgets into real growth opportunities and long-term competitive strength.