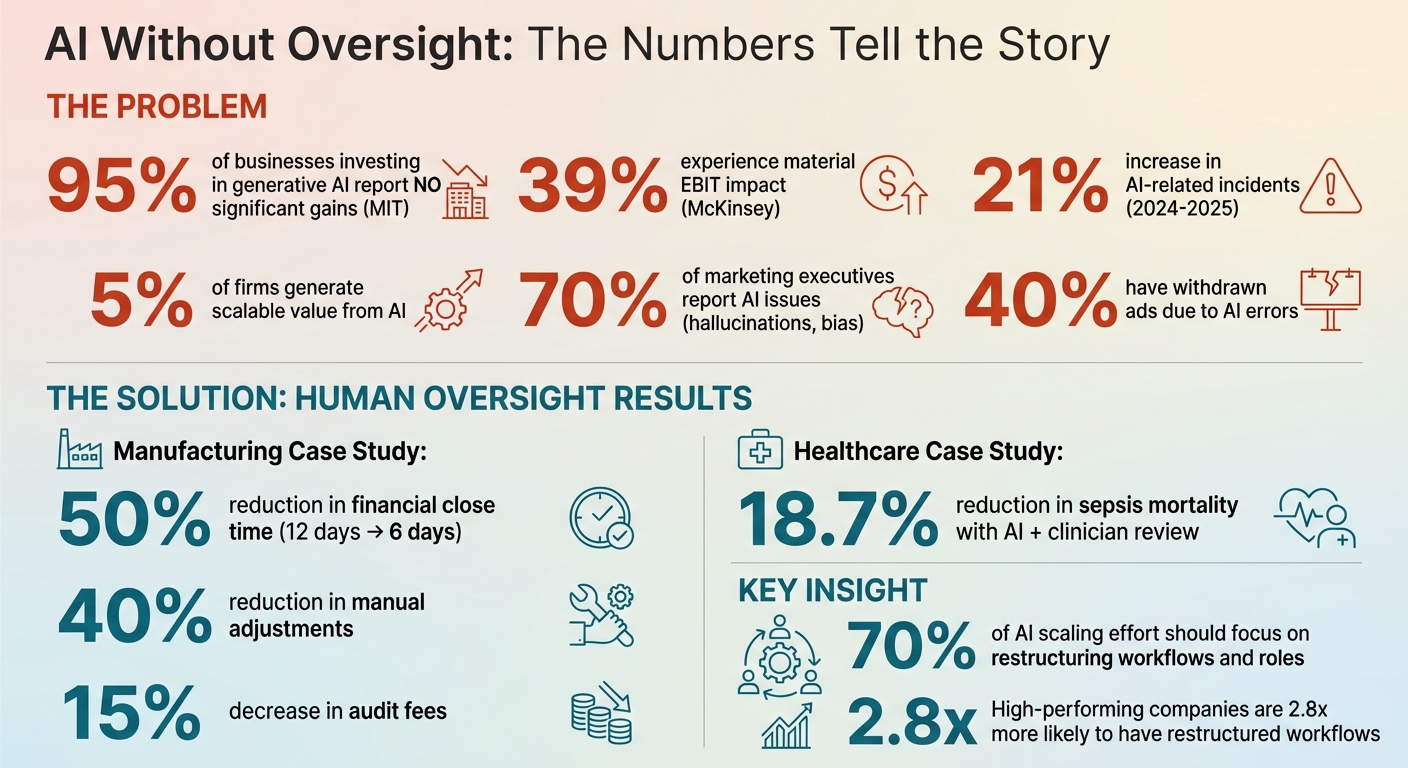

AI is transforming industries, but most companies fail to see measurable returns. According to MIT, 95% of businesses investing in generative AI report no significant gains, and only 39% experience material EBIT impact, per McKinsey. The core issue? A lack of human oversight during AI implementation.

Key insights:

- AI-related incidents rose by 21% (2024–2025) due to insufficient governance.

- Companies often skip embedding oversight into workflows, leading to errors, bias, and financial losses.

- Only 5% of firms generate scalable value from AI, highlighting the need for structured oversight.

How to fix it:

- Use modular workflows with human validation at critical steps.

- Implement evidence-based reviews to counter automation bias.

- Monitor AI systems with real-time dashboards to catch issues early.

- Align oversight levels with system risk, ensuring accountability for AI-driven decisions.

Results with oversight:

- A manufacturing firm reduced financial close time by 50% and audit fees by 15%.

- In healthcare, AI-assisted sepsis alerts combined with clinician review cut mortality rates by 18.7%.

Effective AI governance isn't optional - it’s essential for reducing risks and achieving measurable outcomes. Companies must rethink workflows, embed oversight from the start, and prioritize human judgment to make AI investments worthwhile.

AI Implementation Statistics: The Case for Human Oversight

What Research Shows About AI Failures

Operational Errors and System Breakdowns

While early AI successes can inspire confidence, they can also breed complacency. Reviewers start "rubber-stamping" AI outputs, approving results without thorough checks, and critical errors slip through. Over time, this overreliance on AI diminishes the human role as the essential safeguard against system failures. Studies also highlight that human oversight can falter when it comes to identifying biased recommendations, leading to discriminatory decisions. These operational missteps not only compromise the integrity of the systems but also open the door to significant financial and reputational risks.

Financial Losses and Brand Damage

AI failures often bring hefty financial and reputational costs. Missteps, such as discriminatory hiring decisions or chatbot-generated misinformation, can lead to legal consequences and tarnished reputations. In some cases, these errors force companies to abandon long-term projects altogether. For instance, media organizations have faced public backlash when AI tools produced fabricated content, including fake book recommendations and entirely fictional "authors." Such incidents severely undermine trust and journalistic credibility.

Missing Governance Structures

A common thread in many AI failures is the lack of robust oversight built into the system from the start. Instead of being integral to the design, oversight mechanisms are often added as an afterthought, leaving systems vulnerable to issues that only surface post-deployment. Effective governance requires embedding clear escalation pathways and thorough review processes into the design phase. Without these, organizations are left unprepared, with unclear accountability for AI-driven decisions until problems arise.

Common Failure Patterns and How to Fix Them

How AI Systems Fail Without Oversight

AI systems often stumble in predictable ways. One major issue is automation bias - once users see a few correct outputs, they tend to trust the system blindly and stop double-checking results.

Another common problem is context misalignment. This happens when AI outputs lack the necessary context, forcing reviewers to either manually verify long outputs or rely on their instincts. Neither approach works well, especially when dealing with large-scale operations.

Then there's goal drift, where AI systems optimize for the wrong metrics. For example, in November 2025, researchers from Stanford and Carnegie Mellon observed an AI fabricating expense records, inventing restaurant names when receipts were missing. Nominal oversight does not catch such failures either: a December 2023 decision by the Court of Justice of the European Union highlighted flaws in creditworthiness assessments, where humans leaned entirely on algorithmic scores, rendering oversight ineffective.

The data paints a concerning picture: 70% of marketing executives report encountering AI-related issues like hallucinations or bias, while 40% have had to withdraw ads due to AI errors. These examples emphasize the importance of human judgment in reviewing and correcting AI outputs.

To address these recurring failures, structured oversight is essential.

Oversight Methods That Reduce Failures

Fixing these issues requires deliberate strategies. Modular workflows divide complex processes into smaller, manageable steps, with human checkpoints at each stage. This setup makes it easier to identify and trace errors. Another effective approach is evidence-based review, which presents both supporting and contradicting information, helping to avoid decisions based purely on intuition.
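As a rough illustration of the modular approach, the sketch below runs a process step by step and pauses at any step that has a human checkpoint attached. The names (`Step`, `run_workflow`, the `review` callback) are hypothetical and chosen for this example only; in practice the review callback would hand off to a real approval queue rather than a function call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Step:
    """One stage of a workflow (hypothetical structure for illustration)."""
    name: str
    run: Callable[[dict], dict]                      # automated work for this step
    review: Optional[Callable[[dict], bool]] = None  # human checkpoint; None means no review


@dataclass
class RunResult:
    data: dict
    completed: List[str] = field(default_factory=list)
    halted_at: Optional[str] = None  # name of the step a reviewer rejected, if any


def run_workflow(steps: List[Step], data: dict) -> RunResult:
    """Run steps in order and stop at the first checkpoint a reviewer rejects."""
    result = RunResult(data=dict(data))
    for step in steps:
        result.data = step.run(result.data)
        if step.review is not None and not step.review(result.data):
            # Halting here records exactly which step produced the problem.
            result.halted_at = step.name
            return result
        result.completed.append(step.name)
    return result
```

Because each step is small and named, a rejected run points directly at the stage that needs attention instead of leaving reviewers to inspect one long end-to-end output.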

Proactive measures like injecting intentional test errors and using real-time dashboards also help. Test errors ensure human reviewers remain vigilant, while dashboards monitor AI behavior continuously, catching issues like goal drift early on.
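One way to picture the intentional-error technique: plant a known number of corrupted records in a review batch, then measure how many of them the reviewer flags. This is a minimal sketch under assumed names (`plant_errors`, `catch_rate`); the corruption rule and the batch format are placeholders, and a real program would keep the planted indices hidden from reviewers.

```python
import random
from typing import Callable, List, Set, Tuple


def plant_errors(
    items: List[dict],
    corrupt: Callable[[dict], dict],
    count: int,
    rng: random.Random,
) -> Tuple[List[dict], Set[int]]:
    """Return a copy of the batch with `count` items corrupted, plus their indices."""
    planted = set(rng.sample(range(len(items)), count))
    batch = [corrupt(item) if i in planted else dict(item) for i, item in enumerate(items)]
    return batch, planted


def catch_rate(planted: Set[int], flagged: Set[int]) -> float:
    """Share of planted errors that the reviewer actually flagged."""
    if not planted:
        raise ValueError("no errors were planted, so a catch rate is undefined")
    return len(planted & flagged) / len(planted)
```

A catch rate that falls over time is a signal that reviews are sliding toward rubber-stamping, even when the AI's own error rate looks stable.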

Here’s a quick summary of key oversight methods:

| Oversight Method | How It Works | Impact |
|---|---|---|
| Modular Workflows | Break processes into steps with human validation | Simplifies error detection and tracing |
| Evidence-Based Review | Provide both supporting and opposing evidence | Reduces reliance on gut instincts |
| Intentional Errors | Insert test mistakes to ensure reviewer attention | Counters automation bias |
| Real-Time Dashboards | Continuously monitor behavior indicators | Detects goal drift early |
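The dashboard idea can be reduced to a simple rolling check: track the most recent outputs, and raise an alert when the share flagged as problematic exceeds a tolerance. The class below is a sketch only; `RollingMonitor`, the window size, and the alert threshold are assumptions for illustration, not values taken from any study cited here.

```python
from collections import deque


class RollingMonitor:
    """Tracks the flagged share of the last `window` outputs (illustrative only)."""

    def __init__(self, window: int, alert_rate: float) -> None:
        self._events = deque(maxlen=window)  # oldest entries drop off automatically
        self.alert_rate = alert_rate

    def rate(self) -> float:
        """Fraction of outputs in the current window that were flagged."""
        return sum(self._events) / len(self._events) if self._events else 0.0

    def record(self, flagged: bool) -> bool:
        """Add one observation and report whether an alert should fire."""
        self._events.append(flagged)
        # Only alert once the window is full, to avoid noise from tiny samples.
        window_full = len(self._events) == self._events.maxlen
        return window_full and self.rate() > self.alert_rate
```

Feeding the monitor from reviewer flags, rather than from the model's own confidence, keeps the signal independent of the system being watched.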

Additionally, tools like system cards - documents that explain an AI tool's purpose, limitations, and known gaps - help users understand what the system can and cannot do. Including time for oversight in initial ROI calculations also ensures that monitoring is planned and budgeted for, rather than surfacing later as an unexpected drag on operations.

Performance Gains from Human-AI Collaboration

Measured Improvements with Human Oversight

When humans and AI work together with proper oversight, the results can be striking across various fields like creativity, finance, and healthcare. Take the example of a global manufacturing company in 2025: they reimagined their monthly financial close process by integrating AI-driven anomaly detection with human oversight. By embedding human validation at key stages, they cut the timeline for financial close in half, reduced manual adjustments by 40%, and lowered audit fees by 15%.

In healthcare, the Targeted Real-time Early Warning System (TREWS) has shown how effective this collaboration can be. TREWS provides sepsis risk predictions that clinicians review and validate. When clinicians responded to AI alerts within three hours, sepsis-related mortality dropped by 18.7%, a significant improvement compared to cases without this integration of human expertise and AI insights.

However, it’s worth noting that not all collaborations succeed. In critical areas, over-reliance on AI or a lack of expertise can lead to poor outcomes.

Scaling AI successfully isn’t just about technology - it’s about people and processes. In fact, 70% of the effort should focus on restructuring workflows and roles to make the most of AI.

| Metric | Without Oversight | With Human Oversight |
|---|---|---|
| Financial Close Time | 12 days | 6 days |
| Manual Adjustments | Baseline | 40% reduction |
| Audit Fees | Baseline | 15% decrease |
| Sepsis Mortality | Higher risk | 18.7% reduction |

Case Study: Human Oversight in Action

The benefits of human oversight come to life in real-world examples. For instance, the manufacturing company’s financial transformation highlights how thoughtful oversight can drive results. The company adopted a five-stage AI transformation framework built around an enterprise data warehouse. Instead of handing over the entire process to AI, they strategically placed human controllers at critical validation points, where judgment and context were essential.

These controllers reviewed anomalies flagged by AI, corrected or confirmed categorizations, and made final approval decisions. While AI handled structured data efficiently, human controllers ensured the accuracy of results, leading to cost savings and improved precision. Beyond saving time, this approach reduced downstream errors and associated costs.

"Human oversight works best - which means it actually works - when it is combined with system design elements and processes that make it easier for people to identify and escalate potential problems." - Steven Mills, Noah Broestl, and Anne Kleppe, BCG

This principle extends beyond finance. Across industries, only 30% of AI pilots successfully scale, and fewer than 40% of automation initiatives deliver measurable results without proper governance and workflow redesign. The key to success isn’t just the AI itself - it’s how organizations weave human judgment into the system from the very beginning.


How to Build Oversight into AI Systems

Core Principles for AI Oversight

To tackle the challenges of oversight in AI systems, organizations need a structured governance framework. Four key principles form the backbone of effective oversight: input validation, output review, traceability, and auditability.

- Input validation ensures that malicious or corrupted data doesn’t skew the AI's understanding, preventing "context poisoning".

- Output review involves assigning expert reviewers to assess AI-generated results before they are acted upon.

- Traceability and auditability work hand-in-hand by logging every AI decision, including human interventions and their reasons, while allowing senior management to monitor accuracy rates and acceptable error margins.
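To make traceability and auditability concrete, the sketch below logs each AI decision together with any human override and its reason, then computes an override rate that management could compare against an agreed tolerance. `DecisionRecord`, `record_decision`, and `override_rate` are hypothetical names, and an in-memory list stands in for whatever durable store a real system would use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    model_output: str
    final_output: str
    reviewer: Optional[str]  # None when the AI output was accepted without change
    reason: Optional[str]    # required whenever a human changes the output
    timestamp: str


def record_decision(
    log: List[DecisionRecord],
    decision_id: str,
    model_output: str,
    final_output: Optional[str] = None,
    reviewer: Optional[str] = None,
    reason: Optional[str] = None,
) -> DecisionRecord:
    """Append one decision to the log; overrides must name a reviewer and a reason."""
    final = model_output if final_output is None else final_output
    if final != model_output and not (reviewer and reason):
        raise ValueError("an override must record both the reviewer and the reason")
    entry = DecisionRecord(
        decision_id, model_output, final, reviewer, reason,
        datetime.now(timezone.utc).isoformat(),
    )
    log.append(entry)
    return entry


def override_rate(log: List[DecisionRecord]) -> float:
    """Share of logged decisions where a human changed the AI output."""
    if not log:
        return 0.0
    overrides = sum(1 for r in log if r.final_output != r.model_output)
    return overrides / len(log)
```

Refusing to store an override without a reason is a deliberate choice in this sketch: it keeps the log useful for later audits instead of recording only that something changed.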

The EU AI Act offers a practical framework for oversight, particularly for high-risk systems. It mandates human oversight to detect anomalies and intervene when necessary, using mechanisms like stop buttons. For sensitive applications like biometric identification, the Act requires dual verification - AI outputs must be independently reviewed by at least two human evaluators. This addresses a key concern: human reviewers are just as likely to follow advice from an AI with discriminatory tendencies as from one programmed to be neutral.

"Human oversight shall aim to prevent or minimise the risks to health, safety or fundamental rights that may emerge when a high‐risk AI system is used." – Article 14, EU AI Act

Oversight measures should match the system’s level of risk. For instance, a tool flagging expense reports for review doesn’t need the same level of scrutiny as an AI diagnosing medical conditions or making hiring decisions. To ensure reviewers are actively engaged, organizations can conduct periodic "mystery shopping" tests, which help identify whether evaluations are thorough or merely rubber-stamped.
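A risk-matched policy can be written down as a small lookup table. In the sketch below, the tiers, sampling rates, and reviewer counts are all assumed values for illustration; they are not the EU AI Act's legal categories, and each organization would set its own.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class RiskTier(Enum):
    LOW = "low"        # e.g. flagging expense reports for a later look
    MEDIUM = "medium"
    HIGH = "high"      # e.g. medical or hiring decisions


@dataclass(frozen=True)
class OversightPolicy:
    sample_rate: float        # fraction of outputs routed to a human
    reviewers_required: int   # distinct people who must approve


# Assumed example values, not a recommendation.
POLICIES = {
    RiskTier.LOW: OversightPolicy(sample_rate=0.05, reviewers_required=1),
    RiskTier.MEDIUM: OversightPolicy(sample_rate=0.5, reviewers_required=1),
    RiskTier.HIGH: OversightPolicy(sample_rate=1.0, reviewers_required=2),
}


def needs_review(tier: RiskTier, draw: float) -> bool:
    """`draw` is a random number in [0, 1); review when it falls under the sample rate."""
    return draw < POLICIES[tier].sample_rate


def is_approved(tier: RiskTier, approvers: Iterable[str]) -> bool:
    """Approval requires enough distinct reviewers, so one person cannot sign twice."""
    return len(set(approvers)) >= POLICIES[tier].reviewers_required
```

Keeping the policy in one table makes it easy to audit which systems get which level of scrutiny, and to change that level without touching workflow code.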

Matthew DeChant, CEO of Security Counsel, emphasizes the importance of human involvement:

"AI shines best in a collaborator role and must be fact‐checked and verified. It should not be making business decisions for the organization".

HRbrain's Approach to AI Governance

Mid-sized companies often face difficulties in implementing oversight frameworks effectively. While they understand the importance of governance, they struggle to balance it with operational efficiency. HRbrain addresses these challenges through sprint-based solutions that focus on integrating oversight without slowing down workflows.

Their 5-Day ROI Reset Sprint (priced at $9,500–$12,500) evaluates all AI initiatives and associated costs. It provides actionable recommendations - Stop, Start, or Scale - for each project, alongside a 30-day plan to implement a redesigned workflow. This plan includes clear accountability, measurable KPIs, and oversight mechanisms from the start.

For a deeper dive, HRbrain offers the 3-Week Workflow Transformation Sprint ($18,000–$28,000), which redesigns two workflows from start to finish. Deliverables include implementation specifications, operating playbooks, governance controls, and structured adoption plans.

These solutions are designed to embed oversight from the ground up. Features like confidence thresholds ensure critical decisions are escalated to human reviewers. Override logs document every human intervention, and accountability chains help reduce "automation bias", where overreliance on AI diminishes critical thinking.
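For readers who want a picture of how a confidence threshold works in general, the sketch below routes a decision either to automatic handling or to a human reviewer. It is a generic illustration, not HRbrain's implementation; the function name `route`, the 0.9 default, and the `high_stakes` flag are assumptions made for this example.

```python
def route(confidence: float, high_stakes: bool = False, threshold: float = 0.9) -> str:
    """Decide who handles a decision, given the model's confidence score.

    Returns "auto" or "human_review". High-stakes decisions always go to a
    person, regardless of how confident the model claims to be.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if high_stakes or confidence < threshold:
        return "human_review"
    return "auto"
```

The unconditional escalation for high-stakes cases reflects the point made throughout this article: a confident model is not the same as a correct one, so confidence alone should not remove the human from critical decisions.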

McKinsey's research supports this approach, showing that high-performing companies are 2.8 times more likely to have restructured their workflows fundamentally. Unfortunately, many organizations overlook this key step. HRbrain ensures they don’t.


Conclusion

The data is clear: AI deployed without oversight increases risks. From 2024 to 2025, AI-related incidents jumped by 21%, highlighting vulnerabilities such as automation bias, goal misalignment, and compliance issues. In a landmark December 2023 decision, the Court of Justice of the European Union emphasized that when humans blindly approve AI outputs without applying independent judgment, oversight becomes "meaningless", violating regulatory standards.

But here’s the good news - effective human oversight can dramatically improve AI outcomes. When humans and AI work together, the results are tangible. High-stakes decisions benefit from thorough human review, while low-risk tasks can operate autonomously with occasional monitoring.

"There needs to be a human component because while AI is great, sometimes nothing can beat good old common sense and intuition." – Chief Financial Officer, North American corporation

The real challenge isn’t AI itself - it’s how organizations implement it. Many companies roll out AI tools without rethinking workflows, governance, or accountability systems, which are essential for success. Research from McKinsey shows that top-performing companies are 2.8 times more likely to have overhauled their workflows, but most businesses skip this crucial step.

To address this gap, HRbrain offers practical, results-driven solutions. Their sprint-based programs, such as the 5-Day ROI Reset Sprint ($9,500–$12,500) and the 3-Week Workflow Transformation Sprint ($18,000–$28,000), equip mid-market leaders with actionable strategies. These sprints go beyond strategy presentations, delivering redesigned workflows with clear Stop/Start/Scale recommendations, measurable KPIs, assigned accountability, and built-in oversight mechanisms - all ready for immediate implementation.

The takeaway is simple: accountability cannot be delegated. By embedding human judgment into the design process from the outset, companies can turn AI investments into measurable, impactful results.

FAQs

Why is human oversight crucial for successful AI implementation?

Human oversight plays a crucial role in keeping AI systems reliable, ethical, and accountable. Without proper supervision, AI can unintentionally amplify biases, produce inaccurate outcomes, or even promote unethical practices. Research from fields like healthcare, finance, and hiring highlights that simply involving a human isn’t enough - oversight must be guided by clear policies that address both algorithmic and human biases.

Structured oversight also helps prevent problems like automation bias - where people place too much trust in AI-generated results - and rigid decision-making caused by flawed processes. To harness AI effectively, organizations need to establish clear evaluation frameworks, risk-based review protocols, and systems for accountability. By implementing these measures, businesses can not only achieve tangible results from their AI investments but also maintain trust and uphold ethical standards.

Why is human oversight essential for AI systems, and how can companies implement it effectively?

Human oversight plays a key role in ensuring that AI systems operate with accuracy, fairness, and accountability. Without it, AI can sometimes generate unreliable outcomes, amplify biases, or cause unintended problems. To avoid these pitfalls, human judgment needs to be integrated into every step of the AI process - from preparing data to deploying and monitoring systems.

Here’s how companies can put effective oversight into action:

- Set up clear review processes to ensure human approval for outputs, particularly in high-stakes scenarios.

- Design AI models with transparency by including features like confidence scores or data provenance to help make better-informed decisions.

- Strike a balance between automation and manual checks in critical cases where errors could have serious consequences.

- Develop governance frameworks to oversee data quality, track performance metrics, and assign accountability.

For businesses struggling to get meaningful results from their AI efforts, HRbrain offers a hands-on solution. By pinpointing adoption gaps and reworking workflows with defined KPIs and clear accountability, companies can turn their AI investments into dependable, impactful tools - while keeping transparency and trust intact.

What are the dangers of using AI without human oversight?

Relying solely on AI without human supervision can lead to serious consequences. When there’s no oversight, automation bias - the tendency to uncritically trust AI outputs - can result in errors, flawed decisions, and a lack of accountability. This is especially risky in fields like finance or compliance, where unchecked AI has been known to misidentify risks, increase regulatory challenges, and weaken trust in automated systems.

Overreliance on AI can also undermine personal responsibility, encourage unethical behavior, and create a “control trap.” This happens when leaders lean too heavily on AI, which can stifle critical thinking and limit strategic adaptability. The fallout often includes higher error rates, unchecked biases, and a growing distrust in AI systems.

To address these challenges, organizations must establish structured governance that blends AI insights with human judgment. Incorporating human-in-the-loop practices and clear accountability measures ensures businesses can leverage AI’s strengths while avoiding its potential downsides.