Boards are struggling to measure the impact of AI investments effectively. Here’s the reality: Companies are pouring $30–$40 billion into AI, but 95% report no measurable return from generative AI projects. While 88% of businesses use AI in some capacity, only 39% see any effect on earnings. The problem isn’t the technology - it’s the lack of proper metrics and governance.

Key takeaways for boards:

- AI expertise matters: Companies with AI-savvy boards outperform their peers by 10.9 percentage points in return on equity, while those without such expertise lag by 3.8%.

- Metrics to track: Boards should monitor model accuracy, cost per inference, and model drift to ensure AI systems deliver consistent results over time.

- ROI challenges: AI projects often take 2–4 years to show returns, compared with the 7–12 month payback typical of traditional technology projects. Boards must focus on long-term value.

- Governance is essential: Clear ownership, regular reporting, and alignment with business goals are critical to turning AI investments into success.

The solution? Boards need to adopt specific metrics, establish governance frameworks, and link AI efforts directly to financial and strategic goals. Without these steps, AI investments risk becoming costly experiments.

Core Metrics for Tracking AI Performance

Boards need consistent insights into AI performance to ensure their investments translate into meaningful results. The right metrics bridge the gap between technical performance and business goals - and these metrics must be monitored continuously, not just during launch.

Model Accuracy and Reliability

Accuracy gauges how often an AI model makes correct decisions. For classification models (like fraud detection or customer segmentation), metrics such as precision, recall, and F1 score are essential: precision is the share of positive predictions that are correct, recall is the share of actual positives the model catches, and F1 is their harmonic mean. For regression models (such as demand forecasting), Root Mean Squared Error (RMSE) is a standard way to measure performance.
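As a minimal sketch of how these metrics are computed, the Python below derives precision, recall, and F1 from binary labels and RMSE from a forecast, using only the standard library. The function names and sample values are hypothetical; in practice teams usually rely on an established evaluation library rather than hand-rolled code.

```python
import math


def classification_metrics(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) for a binary classifier such as a fraud flag."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correctly flagged
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed cases
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def rmse(actual, forecast):
    """Root Mean Squared Error for a regression model such as a demand forecast."""
    if not actual or len(actual) != len(forecast):
        raise ValueError("actual and forecast must be non-empty and the same length")
    squared = [(a - f) ** 2 for a, f in zip(actual, forecast)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical labels: 1 = fraud, 0 = legitimate
print(classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 0]))  # (0.75, 0.75, 0.75)
# Hypothetical weekly demand vs. forecast, in units
print(round(rmse([100, 120, 90], [110, 115, 90]), 2))  # 6.45
```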

However, accuracy alone doesn’t paint the full picture. Reliability focuses on how consistently a model performs across various scenarios. Key indicators to monitor include:

- Safety Scores: Track outputs to ensure they aren’t harmful or sensitive.

- Groundedness: Verify that the model relies strictly on the information provided in its prompts.

- Error Rates: Identify and address incorrect or invalid responses.

For instance, in healthcare, targeted AI models have shown measurable success, even when handling large volumes of data. Beyond technical precision, cost efficiency is another critical factor to evaluate.

Cost Per Inference

Cost per inference measures how financially efficient an AI system is at scale. It is calculated by dividing the total system costs - covering infrastructure, licensing, and maintenance - by the number of requests or tokens processed over a given period. Hussain Chinoy, Gen AI Technical Solutions Manager at Google Cloud, emphasizes the importance of tracking this metric:

"You can't manage what you don't measure... KPIs remain critical for evaluating success, helping to objectively assess the performance of your AI models".

Organizations that rely on AI-driven KPIs are three times more likely to adapt quickly and respond effectively than those that don’t. Cost per inference also becomes key when choosing between managed APIs (where the provider runs the infrastructure and bills per request or token) and self-hosted open models (where you pay for and manage your own infrastructure).
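The cost-per-inference formula can be sketched as follows. The cost categories mirror the ones named in the text (infrastructure, licensing, maintenance); the `PeriodCosts` class, the dollar amounts, and the request volume are hypothetical, chosen only to show how a managed API and a self-hosted model might be compared on the same basis.

```python
from dataclasses import dataclass


@dataclass
class PeriodCosts:
    """Total system costs for one reporting period, in dollars (hypothetical structure)."""
    infrastructure: float  # compute, hosting, storage
    licensing: float       # model licences, API fees, subscriptions
    maintenance: float     # monitoring, retraining, support effort

    def total(self) -> float:
        return self.infrastructure + self.licensing + self.maintenance


def cost_per_inference(costs: PeriodCosts, units: int) -> float:
    """Divide total period cost by requests (or tokens) processed in the same period."""
    if units <= 0:
        raise ValueError("units processed must be positive")
    return costs.total() / units


# Hypothetical month with 1,000,000 requests under two deployment options
managed_api = PeriodCosts(infrastructure=0, licensing=42_000, maintenance=8_000)
self_hosted = PeriodCosts(infrastructure=30_000, licensing=0, maintenance=15_000)
for name, costs in [("managed API", managed_api), ("self-hosted", self_hosted)]:
    print(f"{name}: ${cost_per_inference(costs, 1_000_000):.3f} per request")
```

Passing a token count instead of a request count gives cost per token with the same function.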

While cost efficiency ensures financial sustainability, keeping an eye on performance over time helps maintain long-term value.

Model Drift and Performance Over Time

AI models can lose effectiveness when the input data they encounter begins to differ from the data they were trained on - a challenge known as model drift. Boards should track the percentage of production models under active monitoring so that signs of drift are caught early. For generative AI, automated evaluators and red-teaming exercises can help detect drift quickly and maintain performance standards.
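The article does not prescribe a drift statistic. One common choice for numeric inputs is the Population Stability Index (PSI), which compares a feature's distribution at training time with its recent production distribution. The sketch below is a simplified, standard-library version with equal-width bins; the bin count, the 1e-6 floor for empty bins, and the usual rule of thumb (below 0.1 stable, above 0.25 significant drift) are conventions, not figures from this article.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample (expected) and a recent production sample (actual)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # Floor empty bins at a tiny share so the log term stays defined
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = list(range(100))            # hypothetical feature values at training time
recent = [v + 50 for v in range(100)]  # hypothetical production values after a shift
print(population_stability_index(training, training))       # 0.0 (no drift)
print(population_stability_index(training, recent) > 0.25)  # True
```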

In addition, tracking human override rates and running backup drills help keep systems stable and allow prompt correction when issues arise. Companies with structured AI governance processes report a 30% higher success rate in AI project deployments, largely due to their ability to identify and address drift before it affects business outcomes.

Measuring ROI and Financial Impact of AI

Traditional vs AI-Specific Metrics for Board Reporting

Boards need straightforward ways to evaluate whether AI investments are delivering real financial results. The challenge isn’t just tracking expenses - it’s linking AI efforts to tangible business outcomes. With 95% of organizations reporting no measurable return from generative AI projects, the gap between implementation and value creation has become a pressing governance issue.

How to Calculate ROI for AI Projects

To measure ROI for AI projects, start by setting clear business goals, such as reducing costs, increasing revenue, or mitigating risks. Then, choose key performance indicators (KPIs) that align with those goals and establish an 8- to 12-week baseline to track progress.

Value can be calculated using these approaches:

- Efficiency gains: Multiply hours saved by the labor cost per hour.

- Revenue growth: Multiply traffic volume by the improvement in conversion rate and by average order value.

- Risk reduction: Multiply the decrease in incidents by the average cost per incident.
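The three value formulas above can be combined into a simple ROI figure. This is a minimal sketch: every input (hours saved, labor rate, traffic, conversion lift, incident counts, total cost) is a hypothetical placeholder, and ROI here is the plain (value - cost) / cost ratio rather than a discounted measure.

```python
def efficiency_value(hours_saved, labor_cost_per_hour):
    """Efficiency gains: hours saved x labor cost per hour."""
    return hours_saved * labor_cost_per_hour


def revenue_value(traffic, conversion_lift, average_order_value):
    """Revenue growth: extra orders (traffic x conversion-rate lift) x average order value."""
    return traffic * conversion_lift * average_order_value


def risk_value(incidents_avoided, cost_per_incident):
    """Risk reduction: drop in incidents x average cost per incident."""
    return incidents_avoided * cost_per_incident


def simple_roi(total_value, total_cost):
    """Undiscounted ROI as a fraction of cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_value - total_cost) / total_cost


# Hypothetical annual figures measured against the pre-launch baseline
value = (
    efficiency_value(hours_saved=2_000, labor_cost_per_hour=60)
    + revenue_value(traffic=500_000, conversion_lift=0.004, average_order_value=80)
    + risk_value(incidents_avoided=12, cost_per_incident=15_000)
)
print(f"Total value: ${value:,.0f}; ROI: {simple_roi(value, total_cost=300_000):.0%}")
```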

CFOs should focus on Net Present Value (NPV) as the primary measure of AI’s financial impact, factoring in the cost of capital. Other helpful metrics include Internal Rate of Return (IRR) and Payback Period.
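For the discounted measures, a standard-library sketch of NPV, IRR (found by bisection, assuming a single sign change in the cash flows), and a simple payback period is shown below. The cash flows and the 10% cost of capital in the usage example are hypothetical, picked to resemble a multi-year payback profile.

```python
def npv(rate, cash_flows):
    """Net Present Value; cash_flows[0] is the year-0 amount (usually the negative investment)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, low=-0.99, high=10.0, tol=1e-7):
    """Internal Rate of Return by bisection: the rate at which NPV equals zero."""
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [low, high]")
    for _ in range(200):
        mid = (low + high) / 2
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol or (high - low) / 2 < tol:
            return mid
        if f_low * f_mid < 0:
            high = mid                # root lies in the lower half
        else:
            low, f_low = mid, f_mid   # root lies in the upper half
    return (low + high) / 2


def payback_period(cash_flows):
    """Years until cumulative cash flow turns non-negative (linear within a year); None if never."""
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for year, cf in enumerate(cash_flows[1:], start=1):
        if cumulative + cf >= 0:
            return year - 1 + (-cumulative / cf)
        cumulative += cf
    return None


flows = [-500_000, 50_000, 200_000, 300_000, 350_000]  # hypothetical AI project, years 0-4
print(f"NPV at 10%: ${npv(0.10, flows):,.0f}")
print(f"IRR: {irr(flows):.1%}")
print(f"Payback: {payback_period(flows):.2f} years")  # 2.83 years
```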

Some real-world examples highlight AI’s potential:

- In September 2025, Microsoft’s AI cut manual planning by 50% and improved on-time planning by 75%.

- SA Power Networks saved $1 million in one year with AI that flagged corroding utility poles with 99% accuracy.

- Nestlé’s AI tools eliminated manual expense management and tripled reporting efficiency.

However, it’s important to note that AI projects often take longer to deliver returns compared to traditional technology investments. While standard tech projects typically achieve payback in 7–12 months, AI initiatives usually require 2–4 years to yield satisfactory results. Only 6% of organizations report achieving AI payback in under a year. Boards must adjust their expectations and focus on long-term value, using metrics like NPV rather than seeking immediate gains.

These long payback periods are one reason many AI projects currently struggle to show meaningful returns.

Why Companies Fail to See Returns

The lack of measurable returns isn’t due to AI’s capabilities but rather to shortcomings in measurement and execution. David Gallacher, Industry Fellow at UC Berkeley, puts it this way:

"We're not experiencing an AI failure; we're experiencing a measurement failure. It's time to evolve our metrics to match the transformation".

One common mistake is falling into the "efficiency trap." For example, saving 10 hours per week is meaningless unless those hours are redirected to higher-value work, such as writing more sales proposals or resolving more customer issues. Without a plan to redeploy saved time, efficiency gains don’t translate into financial impact.
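To make the efficiency trap concrete, the sketch below counts value only for the share of saved hours that is actually redeployed. The redeployment rate, the value per redeployed hour, and the 48 working weeks per year are hypothetical assumptions; the 10 hours per week comes from the example in the text.

```python
def realized_efficiency_value(hours_saved_per_week, redeployment_rate,
                              value_per_redeployed_hour, working_weeks=48):
    """Annual value from saved time, counting only hours redirected to higher-value work."""
    if not 0.0 <= redeployment_rate <= 1.0:
        raise ValueError("redeployment_rate must be between 0 and 1")
    return hours_saved_per_week * redeployment_rate * value_per_redeployed_hour * working_weeks


# 10 hours saved per week (the example above); $75 per redeployed hour is hypothetical
print(realized_efficiency_value(10, 0.0, 75))  # 0.0: time saved but never redeployed
print(realized_efficiency_value(10, 0.6, 75))  # value from the 60% of hours put to new use
```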

Another issue is treating AI as a side project rather than a core business strategy. AI initiatives are often run as isolated technical experiments instead of being integrated into the broader business vision. As the Boston Consulting Group advises:

"Impact before technology, targets before tools, discipline before hype".

Successful companies approach AI by designing their transformations around an ideal process rather than trying to layer AI onto outdated workflows.

Data and infrastructure challenges also hinder results. In finance, for example, the median ROI for AI projects is only 10%, far below the 20% target many companies aim for. Organizations often invest in AI applications before fixing foundational problems such as fragmented systems, poor data quality, and siloed platforms. Additionally, a lack of training prevents employees from using AI tools effectively, leading to low adoption or misuse.

HRbrain addresses these systemic issues by running focused sprints to identify areas where AI adoption is high but transformation is low. By redesigning workflows, governance, and accountability systems, HRbrain helps mid-market companies tackle the underlying causes of poor ROI.

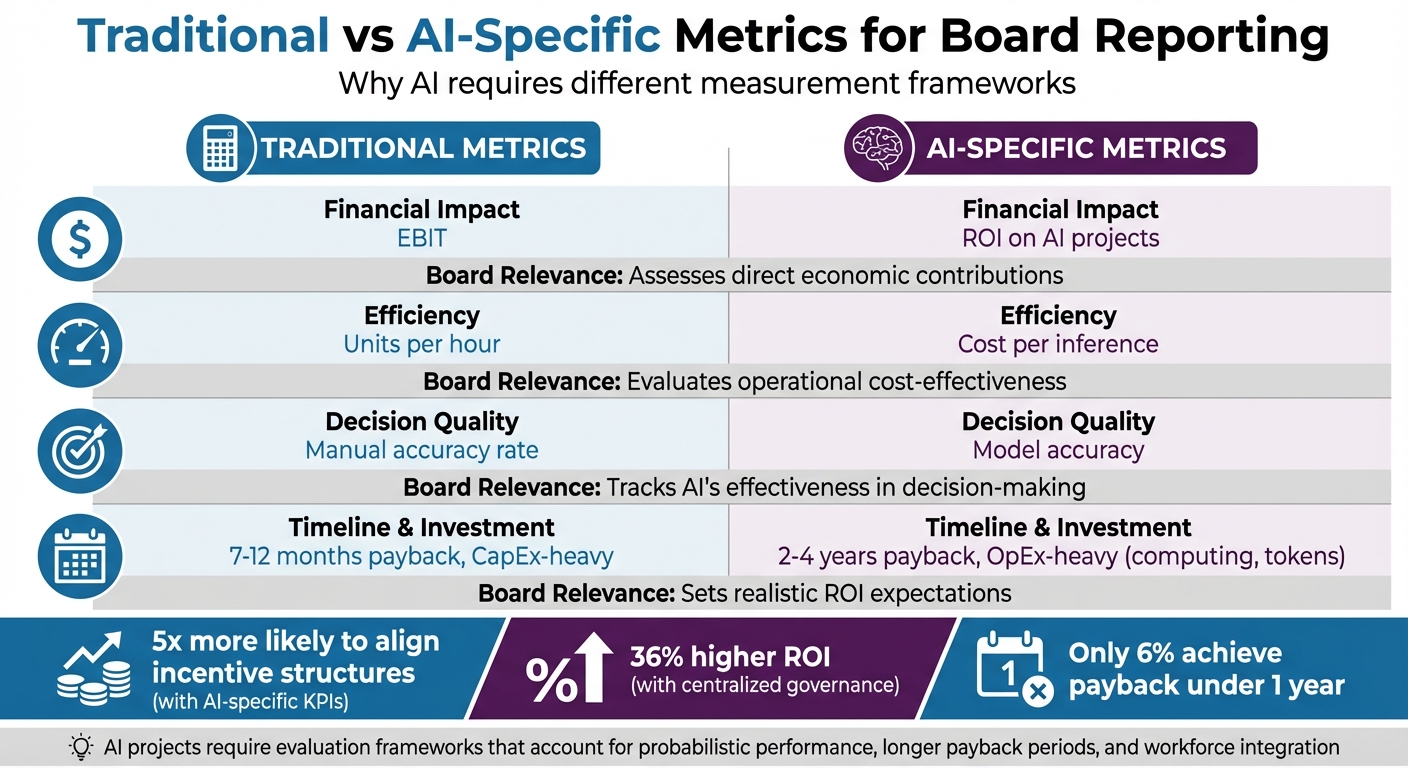

Traditional vs. AI-Specific Metrics

To overcome these challenges, it’s essential to use metrics tailored to AI’s unique characteristics. Unlike traditional technology investments, AI projects require evaluation frameworks that account for probabilistic performance, longer payback periods, and workforce integration.

Traditional metrics often focus on uptime or pass/fail criteria, while AI systems need to be measured on factors like model accuracy, groundedness, and hallucination rates. Similarly, traditional projects are typically capital-expenditure-heavy with quick payback expectations (7–12 months). In contrast, AI projects are operating-expenditure-heavy, driven by ongoing compute and token costs, and usually require 2–4 years to achieve returns.

AI metrics should also capture aspects such as decision speed, model latency, and token throughput, which are unique to AI-driven processes.
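Latency and throughput are usually summarized from request logs. A minimal standard-library sketch, assuming you already have per-request latencies in milliseconds and a token count for a fixed time window (both hypothetical inputs), might look like this. Reporting a high percentile alongside the median is a common design choice because averages hide slow outliers.

```python
import statistics


def latency_summary(latencies_ms):
    """Median and 95th-percentile latency from per-request timings in milliseconds."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    cut_points = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50_ms": statistics.median(latencies_ms), "p95_ms": cut_points[94]}


def token_throughput(tokens_processed, window_seconds):
    """Tokens processed per second over a fixed measurement window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return tokens_processed / window_seconds


# Hypothetical sample: 100 requests taking 1 ms to 100 ms
print(latency_summary(list(range(1, 101))))  # {'p50_ms': 50.5, 'p95_ms': 95.05}
print(token_throughput(tokens_processed=1_800_000, window_seconds=3_600))  # 500.0
```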

| Metric Type | Traditional Example | AI Example | Board Relevance |
|---|---|---|---|
| Financial Impact | EBIT | ROI on AI projects | Assesses direct economic contributions |
| Efficiency | Units per hour | Cost per inference | Evaluates operational cost-effectiveness |
| Decision Quality | Manual accuracy rate | Model accuracy | Tracks AI’s effectiveness in decision-making |

Companies that integrate AI-specific KPIs into their operations are five times more likely to align incentive structures with business goals. The most successful organizations - those in the top 20% of AI performers - use distinct frameworks for different types of AI. For instance, generative AI is evaluated based on short-term productivity gains, with 38% expecting ROI within a year. On the other hand, agentic AI is assessed over 3–5 years, focusing on process redesign and risk management.

Boards must avoid applying outdated, short-term metrics to AI initiatives. As AI evolves, so should the way we measure success, shifting governance toward metrics that reflect its transformative potential.

Setting Up Governance for AI Accountability

When no one takes clear ownership of AI initiatives, they tend to linger in endless pilot stages, failing to deliver tangible business results. The solution? Establishing clear leadership and building structures focused on outcomes. This approach lays the groundwork for well-defined roles and continuous oversight.

Assigning Roles and Ownership

For AI to succeed, leadership must identify specific individuals responsible for its metrics and outcomes. The Boston Consulting Group emphasizes:

"The AI impact agenda [must be] owned by the CEO and executive business leaders - the ultimate P&L owners - not delegated to a technology or IT function".

A Chief AI Officer (CAIO) who reports directly to the CEO or board can significantly boost results. Research shows that organizations with this structure see 10% higher ROI on AI investments, and 57% of CAIOs already report at this level, giving them the authority needed to drive change. Centralized or hub-and-spoke governance models can further increase ROI by 36%.

Board oversight also requires clarity. As of 2024, only 39% of Fortune 100 companies disclosed any form of board-level AI oversight. Boards must define which issues require full-board attention - such as major investments or algorithmic audits - and which can be handled by committees.

The benefits of getting this right are clear: Companies with AI-savvy boards outperform their peers by 10.9 percentage points in return on equity. Assigning responsibility not only prevents stalled initiatives but also sets the stage for continuous evaluation, which is critical for long-term success.

Ongoing Evaluation and Reporting

AI systems evolve constantly, and without regular monitoring, models can drift, performance can decline, and costly failures can occur. Continuous evaluation ensures these risks are managed while maintaining the value AI brings.

Boards must rely on real-time dashboards to monitor key indicators like productivity gains and cost savings. Automated systems that detect issues such as bias, model drift, and performance anomalies are essential, eliminating the need to wait for quarterly reviews.

The frequency of board discussions on AI also matters. Alarmingly, only 14% of board members report discussing AI at every meeting, while 45% say it’s not on their agenda at all. This is a problem, especially when 80% of business leaders cite challenges like AI explainability, ethics, and trust as major hurdles to adoption.

To address this, boards should establish clear triggers for escalation, such as biased outputs, security breaches, or significant model drift. Quarterly AI Compliance Dashboards can help track high-risk applications, monitor model inventories, assess control measures, and document incidents in line with frameworks like the EU AI Act.
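Escalation triggers are easiest to audit when they are written down as explicit thresholds. The sketch below is one hypothetical way to encode them; the metric names and threshold values are illustrative placeholders that a board and management would set for themselves, not recommended levels. A missing reading is treated as an alert in its own right, since a monitoring gap is itself a reason to escalate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    metric: str
    threshold: float
    higher_is_worse: bool = True  # False for scores where a drop is the problem


# Hypothetical metric names and thresholds, for illustration only
TRIGGERS = [
    Trigger("drift_psi", 0.25),
    Trigger("biased_output_rate", 0.01),
    Trigger("security_breaches", 0),  # any breach escalates
    Trigger("groundedness_score", 0.90, higher_is_worse=False),
]


def escalations(triggers, readings):
    """Return a message for every breached or unreported trigger."""
    alerts = []
    for t in triggers:
        value = readings.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no reading (monitoring gap)")
            continue
        breached = (value > t.threshold) if t.higher_is_worse else (value < t.threshold)
        if breached:
            alerts.append(f"{t.metric}: {value} breaches threshold {t.threshold}")
    return alerts


latest = {"drift_psi": 0.31, "biased_output_rate": 0.004,
          "security_breaches": 0, "groundedness_score": 0.94}
for alert in escalations(TRIGGERS, latest):
    print(alert)  # drift_psi: 0.31 breaches threshold 0.25
```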

Direct interaction is also vital. Board members should engage directly with Chief Data and Analytics Officers (CDAOs) and business leaders to gain an unfiltered understanding of progress, rather than relying solely on reports from the CEO or CFO.

Connecting AI Governance to Business Strategy

AI governance often fails when treated as a standalone function. The most successful companies integrate AI oversight into their existing workflows and strategic planning, avoiding the creation of isolated structures.

Audit committees, for example, can expand their scope to include algorithmic audits, ensuring AI systems stay aligned with business objectives and regulatory requirements. Strategic alignment begins with defining the company’s AI approach. McKinsey outlines four archetypes for AI adoption: Business Pioneers (creating new offerings), Internal Transformers (revamping operations), Functional Reinventors (targeting specific workflows), and Pragmatic Adopters (taking a fast-follower approach). Each archetype requires tailored oversight. For instance, Business Pioneers should focus on leadership and strategic investments, while Pragmatic Adopters need to prioritize market intelligence and assess the risks of inaction.

A "zero-based mindset", as described by BCG, is key - redesigning AI initiatives from scratch with a clear vision of ideal processes, rather than retrofitting AI into existing workflows. This approach ensures that AI governance becomes a driver of value creation rather than just an add-on.

Finally, management incentives should align with measurable AI outcomes, such as productivity improvements, cost savings, and revenue growth, rather than simply the successful launch of pilot programs. This alignment ensures AI projects are held to the same performance standards as other critical business investments.


How to Report AI Performance to Boards

Once governance structures and KPIs are in place, the next step is crafting reports that help boards make informed decisions about AI initiatives. The key is to focus on three pillars: a compelling narrative, risk transparency, and progress tracking.

Using Narrative-Driven Reports

Dashboards alone can't capture the broader picture of AI's strategic influence. Reports should go beyond numbers to explain how AI is shaping competitive dynamics - whether by disrupting current product lines, creating new market opportunities, or streamlining operations. Boston Consulting Group sums it up well:

"Impact before technology, targets before tools, discipline before hype".

This approach means prioritizing business outcomes over technical milestones. Show how early successes are evolving into tangible enterprise value. Use transparent dashboards to make AI progress as clear and measurable as financial performance.

Once the story ties back to strategic goals, it's equally important to address the risks involved through compliance metrics.

Including Risk and Compliance Metrics

Boards need a balanced view that highlights both opportunities and challenges. A Quarterly AI Compliance Dashboard can help by tracking high-risk cases, maintaining model inventories, and monitoring control measures. Christine Davine, Managing Partner at Deloitte's Center for Board Effectiveness, highlights the importance of this:

"Effective AI governance is crucial for supporting the board's oversight of AI. It can enable ethical use, enhance data quality, and boost productivity".

These reports should also address unauthorized or "shadow" AI use within the organization, which can pose risks like data breaches. Establish escalation triggers - specific thresholds or incidents that demand immediate attention from the board. Notably, companies with formal AI governance frameworks report 30% higher success rates in project implementation.

Showing Progress Through Staged Metrics

AI development is a journey, and reports should reflect how progress unfolds over time. A three-tiered framework can effectively showcase this evolution:

- Model Quality: Technical accuracy and performance.

- System Quality: Operational scalability and reliability.

- Business Impact: Strategic contributions to the organization.
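One way to keep the three tiers visible in a recurring report, even before business impact materializes, is a small data structure that groups metrics by stage and flags stages with nothing to report yet. This is a hypothetical sketch; the stage names come from the framework above, while the class, its methods, and the sample metrics are illustrative.

```python
from dataclasses import dataclass, field

STAGES = ("Model Quality", "System Quality", "Business Impact")


@dataclass
class StagedReport:
    """Board report grouping metrics by the three stages (hypothetical structure)."""
    metrics: dict = field(default_factory=lambda: {stage: {} for stage in STAGES})

    def record(self, stage, name, value):
        if stage not in self.metrics:
            raise ValueError(f"unknown stage: {stage}")
        self.metrics[stage][name] = value

    def render(self):
        lines = []
        for stage in STAGES:
            lines.append(stage)
            entries = self.metrics[stage]
            if not entries:
                lines.append("  (not yet reported)")
            for name, value in entries.items():
                lines.append(f"  {name}: {value}")
        return "\n".join(lines)


report = StagedReport()
report.record("Model Quality", "F1 score", 0.82)  # hypothetical values
report.record("System Quality", "p95 latency (ms)", 420)
print(report.render())  # Business Impact shows "(not yet reported)"
```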

Conclusion

The disconnect between adopting AI and achieving measurable results often boils down to how organizations measure success and govern their AI initiatives. Bridging this gap requires focusing on the right metrics, accurately assessing financial impacts, setting up clear governance frameworks, and improving reporting practices. It’s not just about using AI - it’s about making it work strategically.

Companies that treat AI as a core part of their strategy, with executive incentives tied to tangible outcomes, consistently outperform their competitors. What sets them apart? They prioritize profit-and-loss (P&L) impact over the number of pilot projects, use AI-driven KPIs to uncover key performance drivers, and align leadership incentives with realized business value rather than mere adoption milestones.

As McKinsey's Aamer Baig aptly puts it:

"AI is closer to a reckoning than a trend. And that is why AI is a board-level priority".

The most successful organizations take a methodical approach: they start with a vision of flawless operations, create transparent dashboards to monitor outcomes, and ensure board members have enough understanding to challenge management’s decisions. Research shows that companies leveraging AI-driven KPIs are five times more likely to align their incentive structures effectively with their objectives.

Studies and frameworks consistently emphasize that precise metrics and strong governance are essential for turning AI investments into measurable successes. As discussed earlier, codifying governance practices and establishing escalation triggers are critical steps. Companies that treat AI oversight as an ongoing strategic priority - and execute with discipline - stand out from the crowd. However, fewer than 25% of organizations currently have board-approved AI policies, signaling both a significant risk and a major opportunity.

The real challenge isn’t adopting AI - it’s building the accountability systems that transform adoption into measurable business results.

FAQs

What metrics should boards focus on to assess the success of AI investments?

Boards should prioritize metrics that tie AI investments directly to tangible business results. These include EBIT impact, revenue growth, cost savings, and productivity improvements. On top of that, AI-focused metrics like model accuracy, user adoption rates, and time-to-value offer a closer look at how well AI initiatives are performing.

When these metrics are aligned with the company’s strategic objectives, boards gain a clearer picture of whether AI efforts are delivering meaningful value and can identify areas that might need fine-tuning to boost ROI.

How can boards ensure AI projects support long-term business objectives?

To ensure AI projects contribute meaningfully to long-term goals, boards should view them as core business drivers, not just experimental side projects. Begin by setting clear, outcome-oriented goals that tie directly to measurable results, such as boosting revenue, cutting costs, or improving productivity. From there, work backward to identify the data, talent, and technology needed to achieve these objectives. These goals should double as key performance indicators (KPIs) for each AI initiative, with quarterly progress updates to highlight early wins or address challenges before they grow.

Boards must also implement a structured governance framework to oversee AI efforts. This could mean folding AI oversight into existing committees or creating a dedicated sub-committee. Regular dashboards should monitor both risks - like bias, security, and compliance - and value metrics, such as ROI or EBIT contributions. Assigning clear ownership for each project is crucial, and having at least one AI-savvy board member or an external advisor can help ensure decisions are both technically sound and strategically aligned.

Finally, boards should conduct focused reviews of their AI portfolio. This means cutting projects with limited impact, scaling those with high potential, and embedding workflows with defined KPIs and accountability. By emphasizing results, consistent reporting, and robust governance, boards can position AI investments to drive real business transformation.

Why do so many AI projects fail to deliver measurable results despite significant investments?

Many AI projects stumble because businesses tend to chase the buzz rather than focusing on a solid strategy. They often expect rapid results without establishing the necessary foundation for success. Common pitfalls include weak governance, unclear KPIs, and a disconnect between AI initiatives and specific business goals. This often leaves AI efforts stuck in pilot phases or unable to achieve measurable outcomes.

For AI to deliver real value, companies must prioritize building workflows, accountability frameworks, and metrics that tie directly to business objectives. Without these essential components, even significant investments risk failing to produce meaningful results.