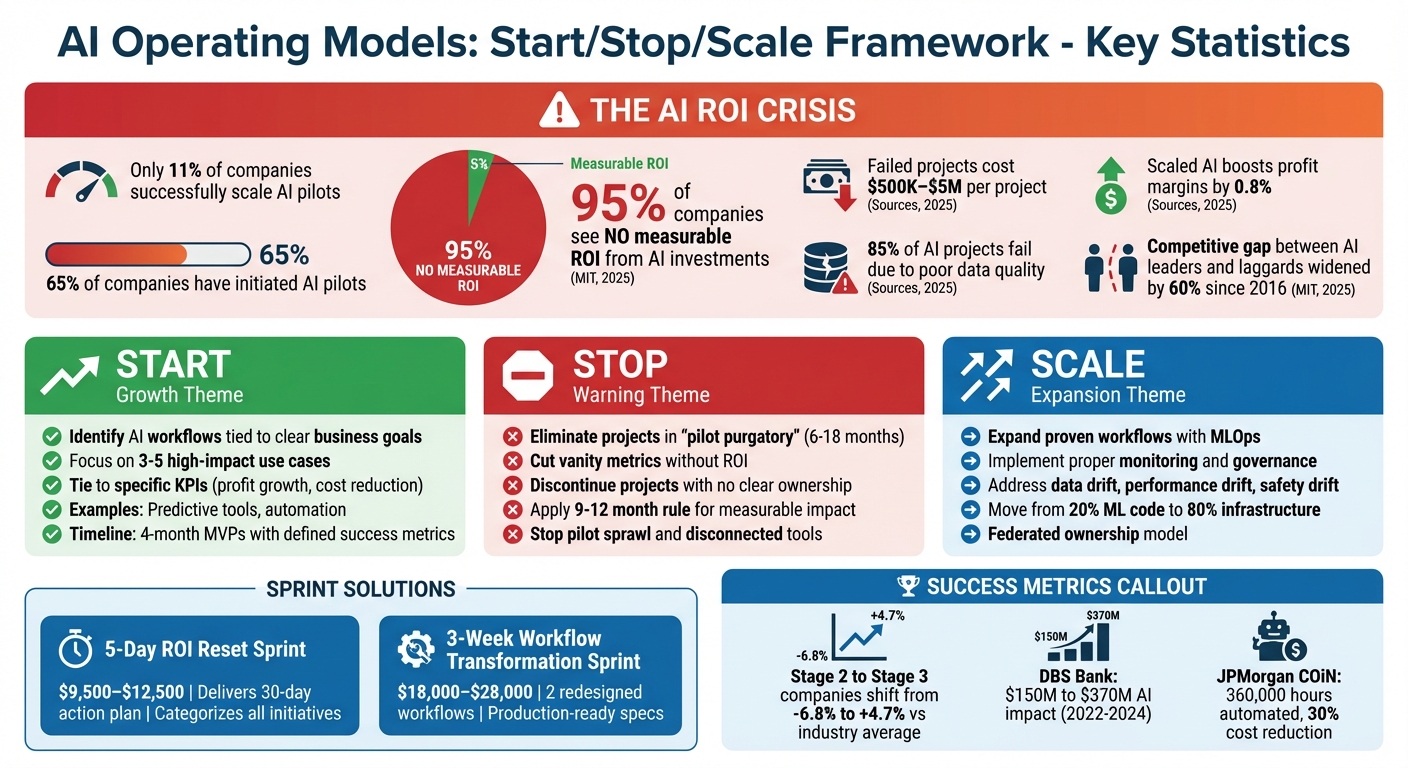

Struggling to get results from AI? Many companies invest millions in AI but fail to see returns. The solution? The Start/Stop/Scale framework. This approach helps businesses focus on measurable outcomes, cut wasteful projects, and scale successful AI initiatives.

Here’s the breakdown:

- Start: Identify AI workflows tied to clear business goals (e.g., profit growth, cost reduction). Focus on high-impact use cases like predictive tools or automation.

- Stop: Eliminate projects stuck in "pilot purgatory" or those relying on vanity metrics (accuracy without ROI). Stop wasting resources on stalled or misaligned initiatives.

- Scale: Expand proven workflows with proper monitoring (MLOps), governance, and risk management. Address challenges like data drift and ensure consistent results.

Key Stats:

- 95% of companies see no measurable ROI from AI investments (MIT, 2025).

- Only 11% of companies successfully scale AI pilots.

- Failed projects cost $500K–$5M, while scaled AI boosts profit margins by 0.8%.

Want results in 90 days? The 5-Day ROI Reset Sprint and 3-Week Workflow Transformation Sprint help businesses cut inefficiencies, launch impactful projects, and scale effectively. These sprints deliver actionable plans and workflows with measurable KPIs, ensuring AI drives real financial outcomes.

AI Investment ROI Crisis: Key Statistics and Start/Stop/Scale Framework Overview


Stop: Eliminating Low-Impact AI Initiatives

To build an effective AI operating model, the first step is identifying and shutting down projects that consume resources without delivering real value. This isn't about stifling innovation - it's about channeling efforts into initiatives that truly make a difference.

While 65% of companies have initiated AI pilots, only 11% have managed to scale them successfully across their organizations. Many businesses find themselves stuck in "pilot purgatory", where projects linger for 6–18 months without ever reaching full implementation. During this period, costs escalate, executives lose confidence, and team morale takes a hit.

When to Stop AI Initiatives

Certain warning signs make it clear when an AI project needs to be discontinued. One of the biggest red flags is vanity metrics. If success is measured by things like "number of queries processed" or "model accuracy" without any connection to business outcomes like profit growth or customer retention, the project is likely off track.

Another issue is pilot sprawl, where multiple teams work independently on similar problems, creating a mess of disconnected tools. This approach doesn't generate enterprise-wide value - it creates confusion. AI initiatives handled exclusively by IT teams, without involvement from the business side, are 50% less likely to succeed compared to those with cross-functional collaboration.

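Model drift is another common pitfall. AI models aren't "set-it-and-forget-it" solutions. As real-world data moves away from the data a model was trained on, its performance degrades. Without clear ownership and regular maintenance, nobody notices until business results suffer.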

A practical benchmark to keep in mind is the 9–12 month rule. If a project hasn’t delivered measurable business impact within this timeframe, it’s time to reassess. Projects dragging on beyond 12 months often suffer from unclear goals, poor data quality, or misalignment with business processes.
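The 9–12 month rule can be turned into a simple portfolio check. This is a minimal sketch. It assumes each project records a start date and a yes/no flag for whether a business KPI has actually moved. The `AIProject` fields are hypothetical, and so is the "stop" label for projects past 12 months with no impact; neither is a standard.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AIProject:
    name: str
    start_date: date
    measurable_impact: bool  # hypothetical flag: has a business KPI actually moved?


def months_elapsed(start: date, today: date) -> int:
    """Whole calendar months between two dates."""
    months = (today.year - start.year) * 12 + (today.month - start.month)
    if today.day < start.day:
        months -= 1  # the current month is not complete yet
    return months


def review_status(project: AIProject, today: date) -> str:
    """Apply the 9-12 month rule; the exact cut-offs are assumptions."""
    if project.measurable_impact:
        return "continue"
    age = months_elapsed(project.start_date, today)
    if age > 12:
        return "stop"      # assumption: past 12 months with no impact -> stop candidate
    if age >= 9:
        return "reassess"  # inside the 9-12 month window
    return "continue"


portfolio = [
    AIProject("support chatbot", date(2024, 1, 15), False),
    AIProject("invoice triage", date(2024, 8, 1), False),
    AIProject("demand forecast", date(2024, 3, 1), True),
]
for p in portfolio:
    print(p.name, review_status(p, date(2025, 6, 1)))
```

Run against a mid-2025 review date, the stalled chatbot is flagged to stop and the invoice project lands in the reassess window. The forecast project continues because it has shown impact, regardless of its age.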

Examples of AI Projects to Discontinue

Here are some examples of low-impact AI initiatives, the risks they pose, and better alternatives to consider:

| Low-Impact Project | Associated Risks | Alternative Approach |
|---|---|---|
| Unintegrated Chatbots | Data silos, fragmented user experience, accuracy capped at 92% without integration | Integrated workflows that enable seamless customer interactions with over 97% accuracy |
| "Tech-for-Tech" Pilots | High costs with no measurable business impact, lower success rates in IT silos | Projects tied to growth, cost savings, or quality improvements, with clear business ownership |
| No Clear Ownership | Model performance declines, biased results, compliance risks | Assign business sponsors to oversee the entire lifecycle, including post-launch monitoring |
| Stalled Pilots (>12 months) | Wasted resources, team burnout, missed opportunities that widen competitive gaps | Set clear exit criteria and aim for 4-month MVPs with defined success metrics |
| Ad Hoc Tools | Reinventing the wheel, inconsistent quality, slower delivery times | Use reusable templates and standardized architectures for faster, more reliable deployment |

By recognizing and halting these types of projects, organizations can free up resources and shift focus to initiatives that deliver real results.

The financial cost of continuing underperforming projects is staggering. A single failed AI pilot typically costs between $500,000 and $2 million, with more complex implementations exceeding $5 million. However, the opportunity cost is even greater. Since 2016, the competitive gap between AI leaders and laggards has widened by 60%. As Ayelet Israeli and Eva Ascarza wrote in Harvard Business Review:

"AI initiatives fail less because models are weak and more because organizations are unprepared".

Shutting down low-impact projects isn’t about admitting defeat - it’s a strategic move. It allows teams to redirect their energy and resources toward high-impact initiatives that can truly drive business success. With these distractions out of the way, the path is clear to focus on projects that yield measurable, meaningful outcomes.

Start: Launching High-Impact AI Workflows

Once you've cleared out low-impact projects, it's time to focus on AI workflows that truly deliver results. The goal? Turning AI investments into measurable financial returns.

How to Start AI Initiatives

Begin by identifying costly bottlenecks instead of exploring AI's endless possibilities. This ensures every AI use case is tied to a clear, quantifiable business goal before development even begins.
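Putting a dollar figure on a bottleneck is usually simple arithmetic: volume times handling time times the loaded cost of an hour. The sketch below assumes you can estimate those three inputs, plus the share of the work AI could plausibly remove. The invoice numbers are purely hypothetical.

```python
def annual_bottleneck_cost(cases_per_month: int, minutes_per_case: float,
                           loaded_hourly_rate: float) -> float:
    """Yearly labor cost of a manual step: volume x handling time x hourly cost."""
    hours_per_year = cases_per_month * 12 * minutes_per_case / 60
    return hours_per_year * loaded_hourly_rate


def addressable_savings(annual_cost: float, automatable_share: float) -> float:
    """Portion of that cost AI could plausibly remove (the share is an estimate)."""
    if not 0.0 <= automatable_share <= 1.0:
        raise ValueError("automatable_share must be between 0 and 1")
    return annual_cost * automatable_share


# Hypothetical example: 2,000 invoices a month, 15 minutes each, $60/hour loaded cost
cost = annual_bottleneck_cost(2000, 15, 60)
print(cost)                            # 360000.0
print(addressable_savings(cost, 0.5))  # 180000.0
```

A figure like this gives the use case a quantified goal before any development starts.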

Zero in on 3–5 initiatives that directly impact your profit and loss (P&L). These typically fall into six common workflow types: Q&A-based search, summarization, content generation, content transformation, virtual agents, and code generation. Trying to launch too many pilots at once spreads resources thin and weakens results.
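One way to enforce a short list is to drop candidates with no estimated P&L impact and rank the rest. The ranking below uses a risk-adjusted score, impact times confidence, and caps the list at a handful. This is a sketch under assumptions: the `Candidate` fields, the confidence weighting, and the default cap of five are illustrative choices, not a prescribed scoring model. The six workflow types come from the text above.

```python
from dataclasses import dataclass

# The six common workflow types listed above
WORKFLOW_TYPES = {
    "qa_search", "summarization", "content_generation",
    "content_transformation", "virtual_agent", "code_generation",
}


@dataclass
class Candidate:
    name: str
    workflow_type: str
    annual_pnl_impact: float  # estimated dollars per year (hypothetical estimate)
    confidence: float         # 0..1, how much you trust that estimate


def shortlist(candidates, max_initiatives=5):
    """Keep P&L-linked candidates and return the top few by risk-adjusted impact."""
    for c in candidates:
        if c.workflow_type not in WORKFLOW_TYPES:
            raise ValueError(f"unknown workflow type: {c.workflow_type}")
    linked = [c for c in candidates if c.annual_pnl_impact > 0]
    ranked = sorted(linked, key=lambda c: c.annual_pnl_impact * c.confidence,
                    reverse=True)
    return ranked[:max_initiatives]


candidates = [
    Candidate("invoice summaries", "summarization", 400_000, 0.5),
    Candidate("support agent", "virtual_agent", 900_000, 0.3),
    Candidate("policy search", "qa_search", 150_000, 0.9),
    Candidate("marketing copy", "content_generation", 0, 0.9),
    Candidate("code assist", "code_generation", 300_000, 0.6),
]
for c in shortlist(candidates, max_initiatives=3):
    print(c.name)
```

Weighting by confidence keeps a large but speculative estimate from automatically outranking a smaller, well-understood one.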

Before diving in, assess your data's quality and readiness. AI amplifies flaws in your processes, and if your data is messy, incomplete, or siloed, those issues will stand out even more. It's no surprise that 85% of AI projects fail due to poor data quality or a lack of relevant data. Additionally, 58% of AI leaders cite disconnected systems as a major hurdle to successful deployment.
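A first-pass data readiness check can be as simple as measuring how often each required field is actually populated. This sketch assumes records arrive as Python dictionaries. The 95% completeness threshold is an arbitrary assumption, and a real assessment would also look at accuracy, freshness, and whether the data can be joined across systems.

```python
def field_completeness(records, fields):
    """Share of records in which each required field is present and non-empty."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in fields
    }


def readiness_gaps(records, fields, threshold=0.95):
    """Fields whose completeness falls below the (assumed) threshold."""
    scores = field_completeness(records, fields)
    return sorted(f for f, s in scores.items() if s < threshold)


records = [
    {"customer_id": 1, "region": "EU", "amount": 10},
    {"customer_id": 2, "region": "", "amount": 12},
    {"customer_id": 3, "amount": None},
    {"customer_id": 4, "region": "US", "amount": 7},
]
print(field_completeness(records, ["customer_id", "region", "amount"]))
print(readiness_gaps(records, ["customer_id", "region", "amount"]))
```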

"AI doesn't replace weak processes: it exposes and amplifies them." - Vittesh Sahni, Sr. Director of AI, Coherent Solutions

Establish tiered governance to manage risk effectively. For instance, apply strict oversight to sensitive, customer-facing tools while using lighter controls for internal applications.
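Tiered governance can be encoded as a lookup from risk tier to required controls, so every new tool is classified before launch. The sketch below is hypothetical throughout: the three tiers, the classification rules, and the control values are placeholders for whatever your own risk policy specifies.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"        # internal, no sensitive data
    MEDIUM = "medium"  # internal, touches sensitive data
    HIGH = "high"      # customer-facing


# Illustrative control sets; real values come from your own risk policy.
CONTROLS = {
    RiskTier.LOW: {"human_review": False, "audit_interval_days": 180, "red_team_before_launch": False},
    RiskTier.MEDIUM: {"human_review": False, "audit_interval_days": 90, "red_team_before_launch": True},
    RiskTier.HIGH: {"human_review": True, "audit_interval_days": 30, "red_team_before_launch": True},
}


def classify(customer_facing: bool, handles_sensitive_data: bool) -> RiskTier:
    """Assign the strictest tier that applies."""
    if customer_facing:
        return RiskTier.HIGH
    if handles_sensitive_data:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_controls(customer_facing: bool, handles_sensitive_data: bool) -> dict:
    """Controls an initiative must satisfy before it ships."""
    return CONTROLS[classify(customer_facing, handles_sensitive_data)]


print(required_controls(customer_facing=True, handles_sensitive_data=False))
print(required_controls(customer_facing=False, handles_sensitive_data=False))
```

Keeping the controls in one table makes the policy easy to review and means lighter-touch internal tools don't inherit the overhead meant for customer-facing ones.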

Once your problem is clearly defined and your data is ready, the next step is to select use cases that promise measurable results.

Selecting Use Cases with Measurable Benefits

Every AI initiative should be tied to 1–3 specific KPIs that directly impact business outcomes. These could include reducing cycle times, increasing revenue per employee, cutting operational costs, or boosting customer retention. Define these metrics upfront and track progress using a business dashboard - not just a technical monitoring tool.
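Defining KPIs upfront means recording a baseline and a target for each one, so progress can be reported as a share of the gap closed. The sketch below works whether the target is higher than the baseline (retention) or lower (cycle time). The `KPI` class and the 1–3 limit check are illustrative, and the example figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    name: str
    baseline: float
    target: float
    current: float

    def progress(self) -> float:
        """Fraction of the baseline-to-target gap closed so far."""
        gap = self.target - self.baseline
        if gap == 0:
            raise ValueError("target must differ from baseline")
        return (self.current - self.baseline) / gap


def validate_kpis(kpis):
    """Enforce the 1-3 KPI rule for a single initiative."""
    if not 1 <= len(kpis) <= 3:
        raise ValueError("tie each initiative to 1-3 business KPIs")
    return kpis


kpis = validate_kpis([
    KPI("cycle_time_days", baseline=10, target=6, current=8),
    KPI("customer_retention", baseline=0.80, target=0.85, current=0.82),
])
for k in kpis:
    print(k.name, round(k.progress(), 2))
```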

Take Italgas Group, a European gas distributor, as an example. In 2024, they rolled out "WorkOnSite", a predictive AI tool for managing remote construction sites. Their goals were clear: speed up project completion and reduce physical inspections. The results? A 40% faster project completion rate and an 80% drop in inspections. On top of that, their "Bludigit" unit commercialized the software, earning $3 million in revenue with margins exceeding 50%.

DBS Bank, led by CEO Piyush Gupta, took a different approach. They set a bold goal of running 1,000 AI experiments annually, but they didn't stop at experimentation; they meticulously tracked economic outcomes. Between 2022 and 2024, the bank more than doubled AI's financial impact, growing it from $150 million to $370 million. By early 2024, they had implemented over 350 AI use cases and projected an economic impact surpassing $1 billion by 2025.

When selecting use cases, consider how AI will work alongside humans. Will it act as an advisor (offering recommendations while humans make the final call), a co-pilot (drafting content for human review), an agent (executing tasks autonomously within set limits), or an orchestrator (managing multiple systems while humans handle exceptions)? This decision shapes both the workflow design and success metrics.

"Define success before you start. Tie metrics to business outcomes, not just technical accuracy." - Ayelet Israeli and Eva Ascarza

Start small and focus on specific tasks. For instance, JPMorgan Chase's COiN system, introduced under CEO Jamie Dimon, automated 360,000 hours of legal work annually by targeting one task: contract review. This initiative slashed legal operation costs by 30% and reduced compliance errors significantly. Instead of overhauling their entire legal department, they concentrated on a single high-impact workflow and executed it exceptionally well.

The key difference between successful and failed AI projects often lies in workflow redesign. Simply adding AI to existing processes isn't enough; instead, rethink the entire workflow and assign clear roles to both humans and AI. The model itself is the smaller part of the job. Machine learning typically accounts for just 20% of the effort in an AI project. The other 80% goes into building data pipelines, integrating systems, setting up governance, and managing organizational change.


Scale: Growing Proven AI Workflows

Expanding a successful AI pilot into a full-scale enterprise system isn't just about adding more users. It requires a thoughtful overhaul of how AI is managed, monitored, and governed.

Scaling AI with MLOps and Monitoring

Scaling AI is more than duplicating a pilot project across departments. It demands the adoption of MLOps - treating AI models like products with proper versioning, monitoring, and governance practices.

Interestingly, the machine learning code itself accounts for just 20% of the work. The other 80% involves building robust data pipelines, integrating systems, creating audit trails, and managing changes effectively.

To scale successfully, you need to monitor three types of drift:

- Data Drift: Changes in input patterns over time.

- Performance Drift: A drop in accuracy or speed.

- Safety Drift: Instances where the system generates harmful outputs or exposes sensitive information.
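For data drift, one widely used technique is the Population Stability Index (PSI). PSI compares the distribution of a feature at training time with its distribution in production. The article does not prescribe a method, so treat this as a swapped-in example that covers data drift only. It assumes both histograms use the same bin edges. The 0.1 and 0.25 cut-offs are a commonly cited rule of thumb, not a universal standard.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between a baseline and a current histogram.

    Both arguments are counts per bin, computed with the same bin edges.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must use the same bins")
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) on empty bins
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


def drift_level(score):
    """Rule-of-thumb interpretation of a PSI score."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"


baseline = [100, 100, 100, 100]  # hypothetical training-time bin counts
this_week = [10, 40, 150, 200]   # hypothetical production bin counts
print(drift_level(psi(baseline, this_week)))
```

Performance drift and safety drift need different signals, such as accuracy against labeled samples or flagged outputs. This check will not catch them.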

By addressing these drifts, you can ensure your system is ready for production. A well-structured scaling roadmap should include checkpoints like:

- Data Readiness: Verifying data provenance and privacy.

- Offline Quality: Setting clear metrics and running safety tests.

- Operational Readiness: Establishing service level objectives and creating dashboards.

- Change Approval: Preventing broken systems from scaling prematurely.
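The checkpoints above work best as a hard gate: a model version cannot be promoted until every one is signed off. This is a minimal sketch. The checkpoint names mirror the list above, while `ReleaseCandidate` and the set-of-sign-offs representation are hypothetical.

```python
from dataclasses import dataclass, field

# Mirrors the scaling roadmap checkpoints listed above
CHECKPOINTS = (
    "data_readiness",
    "offline_quality",
    "operational_readiness",
    "change_approval",
)


@dataclass
class ReleaseCandidate:
    model_version: str
    passed: set = field(default_factory=set)  # checkpoints already signed off


def missing_checkpoints(rc: ReleaseCandidate) -> list:
    """Checkpoints still blocking this candidate, in roadmap order."""
    return [c for c in CHECKPOINTS if c not in rc.passed]


def can_promote(rc: ReleaseCandidate) -> bool:
    """Promote only when every checkpoint has been signed off."""
    return not missing_checkpoints(rc)


rc = ReleaseCandidate("v1.4", {"data_readiness", "offline_quality"})
print(can_promote(rc), missing_checkpoints(rc))
```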

From the start, integrate safety measures into your system. Equip your on-call teams with tools like a one-click kill switch to shut down problematic routes, tools, or models if performance or safety thresholds are breached. Additionally, implement a "Safe Mode" to gracefully degrade the system when necessary.
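A kill switch and Safe Mode can share one mechanism: a set of disabled routes that is checked on every request, with a fallback handler when a route is off. This is a sketch under assumptions. The in-memory set stands in for a shared feature-flag store. The route name, error-rate threshold, and fallback message are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class KillSwitch:
    """In-memory set of disabled routes; a real system would use a shared flag store."""
    disabled: set = field(default_factory=set)

    def trip(self, route: str) -> None:
        self.disabled.add(route)

    def reset(self, route: str) -> None:
        self.disabled.discard(route)


def enforce_threshold(switch: KillSwitch, route: str,
                      error_rate: float, max_error_rate: float) -> bool:
    """Trip the switch automatically when a route breaches its error budget."""
    if error_rate > max_error_rate:
        switch.trip(route)
        return True
    return False


def handle(question: str, route: str, switch: KillSwitch,
           model_call: Callable[[str], str],
           safe_mode: Callable[[str], str]) -> str:
    """Use the model unless its route is switched off; otherwise degrade gracefully."""
    if route in switch.disabled:
        return safe_mode(question)
    return model_call(question)


switch = KillSwitch()
model = lambda q: f"model answer to: {q}"
fallback = lambda q: "This feature is temporarily unavailable; a specialist will follow up."

enforce_threshold(switch, "summarize", error_rate=0.08, max_error_rate=0.05)
print(handle("Summarize ticket 42", "summarize", switch, model, fallback))
```

Routing every call through one check means the on-call engineer's "one click" is just `switch.trip(route)`, and recovery is `switch.reset(route)`.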

"Organizations that master ModelOps will fundamentally change how they deliver value through AI." - Mary Kryska, EY Americas AI and Data Responsible AI Leader

To avoid chaos as you scale, manage prompt sprawl with version-controlled template libraries - similar to how you manage code. Automate repetitive tasks like dataset materialization, index rebuilding, and smoke testing, so your team can focus on critical go/no-go decisions.
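A versioned prompt library can start as a registry keyed by template name and version number, with versions treated as immutable like tagged code releases. This sketch is an in-memory stand-in for a Git-backed library and uses the standard library's `string.Template`. The `PromptRegistry` class and the template names are hypothetical.

```python
from string import Template


class PromptRegistry:
    """In-memory stand-in for a version-controlled prompt library."""

    def __init__(self):
        self._templates = {}  # (name, version) -> Template

    def register(self, name: str, version: int, text: str) -> None:
        key = (name, version)
        if key in self._templates:
            # Versions are immutable, like tagged releases in source control
            raise ValueError(f"{name} v{version} already exists; bump the version")
        self._templates[key] = Template(text)

    def latest_version(self, name: str) -> int:
        versions = [v for (n, v) in self._templates if n == name]
        if not versions:
            raise KeyError(name)
        return max(versions)

    def render(self, name: str, version: int = None, **values) -> str:
        """Fill a template; substitute() raises KeyError if a placeholder is missing."""
        if version is None:
            version = self.latest_version(name)
        return self._templates[(name, version)].substitute(**values)


registry = PromptRegistry()
registry.register("summarize", 1, "Summarize: $text")
registry.register("summarize", 2, "Summarize in $n bullets: $text")
print(registry.render("summarize", text="Q3 board memo", n=3))
print(registry.render("summarize", 1, text="Q3 board memo"))
```

Rendering every registered template with sample values is a cheap automated smoke test. It catches a missing placeholder before a change ships.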

Governance should be tailored based on risk levels. For instance, a customer-facing chatbot will require stricter controls and more frequent audits compared to an internal summarization tool. This risk-based governance allows for faster progress in low-risk areas while maintaining tight oversight where it matters most.

Scaling Stages Comparison

Each phase of scaling comes with its own challenges, goals, and risks. Here's how they compare:

| Scaling Stage | Requirements | Key KPIs | Associated Risks |
|---|---|---|---|
| Pilot (PoC) | Small-scale data, manual testing, sandbox environment | Technical feasibility, model accuracy, initial user feedback | Siloed efforts, "pilot paralysis", lack of business alignment |
| Production | MLOps pipelines, integration with legacy APIs, GRC framework | Reliability, latency (p95), cost per request, data drift | Technical debt, security vulnerabilities, integration friction |
| Enterprise | Federated ownership, modular architecture, upskilled workforce | ROI/value realization, adoption rate, speed to market | Regulatory non-compliance, brand reputation, model hallucinations |
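Two of the production KPIs in the table are straightforward to compute from request logs. This sketch assumes you already collect per-request latencies in milliseconds and a total cost for the period. It uses the nearest-rank definition of the 95th percentile, which is one of several common percentile definitions; monitoring tools may interpolate instead.

```python
import math


def p95(latencies_ms):
    """95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("no samples")
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def cost_per_request(total_cost: float, request_count: int) -> float:
    """Average spend per request over the same window."""
    if request_count <= 0:
        raise ValueError("request_count must be positive")
    return total_cost / request_count


latencies = [120, 80, 300, 95, 110, 105, 98, 130, 90, 101]  # hypothetical samples
print(p95(latencies), "ms")
print(round(cost_per_request(42.0, 1200), 4), "USD per request")
```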

At the enterprise stage, a shift from centralized to federated ownership becomes essential. High-performing companies move from a centralized Center of Excellence to a structure where individual business units manage their own AI use cases while sharing a common infrastructure. This shift calls for an operating model redesigned to support AI at scale.

Despite its benefits, only 11% of companies have embraced a component-based development model, a key characteristic of high performers. This modular approach enables updates to fast-evolving elements like LLM hosting or agents without overhauling stable infrastructure like cloud hosting. Think of it as replacing a single part in a machine rather than rebuilding the entire system.
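In code, a component-based model usually means the stable workflow depends on a small interface rather than on a specific vendor. That way the fast-moving piece, such as the LLM provider, can be swapped without touching the rest. This sketch uses Python's `typing.Protocol`. `VendorAModel` and `VendorBModel` are hypothetical placeholders that return strings instead of calling a real API.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only thing the workflow knows about the model layer."""
    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"  # placeholder for a real API call


class VendorBModel:
    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"  # placeholder for a real API call


class SummaryWorkflow:
    """Stable workflow code that depends only on the TextModel interface."""

    def __init__(self, model: TextModel):
        self.model = model

    def run(self, document: str) -> str:
        return self.model.complete(f"Summarize: {document}")


workflow = SummaryWorkflow(VendorAModel())
print(workflow.run("Q3 report"))
workflow.model = VendorBModel()  # swap one component; the workflow is unchanged
print(workflow.run("Q3 report"))
```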

Implementing the Start/Stop/Scale Framework with HRbrain

The Start/Stop/Scale framework thrives on structured execution and clear accountability. HRbrain brings this to life with two sprint-based solutions designed to deliver decisions and workflows that are ready for production.

For many mid-market companies, there’s no consistent process to identify what’s working, cut out what’s not, and expand what drives measurable results. In fact, 65% of organizations aren’t tracking the impact of generative AI adoption on their workforce, and only 15% have successfully integrated and scaled AI across their operations. HRbrain’s sprint methodology directly tackles this challenge by turning assessments into actionable outcomes that deliver immediate ROI.

5-Day ROI Reset Sprint

The 5-Day ROI Reset Sprint (priced between $9,500 and $12,500) evaluates every AI initiative and expenditure across your organization, categorizing each one into Start, Stop, or Scale decisions. This detailed review ensures you avoid the trap of “pilot purgatory” by aligning initiatives with measurable business value.

The sprint produces a 30-day action plan with clear ownership assigned to each initiative, moving from vague experimentation to personal accountability. Each decision is backed by telemetry-driven KPIs - such as AI prompts per active user or output refinement rates - instead of relying on subjective productivity claims. As part of the plan, one redesigned workflow is prepared for immediate deployment, complete with governance controls and adoption systems to ensure smooth execution.
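Telemetry KPIs like these can be derived from a plain event log. This sketch is built on assumptions. It uses a hypothetical event schema with a `user` field and a `type` of `"prompt"` or `"refine"`. It also reads "output refinement rate" as refinements per prompt; your tooling may define both metrics differently.

```python
def telemetry_kpis(events):
    """Prompts per active user and refinement rate from a list of usage events."""
    prompts = [e for e in events if e["type"] == "prompt"]
    refinements = [e for e in events if e["type"] == "refine"]
    if not prompts:
        return {"prompts_per_active_user": 0.0, "refinement_rate": 0.0}
    active_users = {e["user"] for e in prompts}  # users who sent at least one prompt
    return {
        "prompts_per_active_user": len(prompts) / len(active_users),
        "refinement_rate": len(refinements) / len(prompts),
    }


events = [
    {"user": "ana", "type": "prompt"},
    {"user": "ana", "type": "prompt"},
    {"user": "ben", "type": "prompt"},
    {"user": "ana", "type": "refine"},
    {"user": "cy", "type": "login"},  # ignored: not a prompt or refinement
]
print(telemetry_kpis(events))
```

A falling refinement rate is one signal that outputs are becoming usable as-is. That evidence is more concrete than a self-reported productivity claim.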

3-Week Workflow Transformation Sprint

The 3-Week Workflow Transformation Sprint (costing between $18,000 and $28,000) focuses on redesigning two workflows from start to finish, embedding AI capabilities directly into daily operations. This sprint reimagines work processes to fully integrate AI into how tasks are performed.

Deliverables include detailed implementation specs, operational playbooks, and structured adoption systems to ensure the redesigned workflows are effectively utilized. The sprint is built on four critical pillars: Strategic Alignment, Technical Foundation, Governance, and Organizational Readiness. It defines AI’s role in each workflow - whether as an advisor suggesting actions, an executor handling routine tasks, or an augmenter improving human decision-making. For mid-market firms, measurable ROI from AI deployment typically starts showing within 6–12 months. This sprint accelerates that timeline by delivering production-ready workflows with defined ownership and KPIs from the outset.

Conclusion: Achieving AI ROI with the Start/Stop/Scale Framework

The challenge of bridging the gap between AI investments and tangible returns isn’t rooted in technology - it’s about how organizations operate. While 65% of companies initiate AI pilots, only 11% manage to scale them across the enterprise. The Start/Stop/Scale framework addresses this by cutting inefficiencies, prioritizing impactful workflows, and creating the structure needed to turn experiments into real business results.

Stop decisions help prevent wasting resources on initiatives stuck in "pilot purgatory": those without a clear business purpose or that suffer from issues like model drift. Start decisions focus on launching projects tied to specific KPIs, such as EBITDA improvements or reduced churn, ensuring every dollar spent has a measurable goal. Scale decisions leverage reusable templates and shared architectures, cutting delivery times by 50–60% and transforming small-scale experiments into enterprise-wide success.

The financial benefits of scaling AI are hard to ignore. Companies that advance from running pilots (Stage 2) to embedding AI into their operations (Stage 3) see their performance shift dramatically, from 6.8 percentage points below the industry average to 4.7 percentage points above it.

HRbrain’s 5-Day ROI Reset Sprint and 3-Week Workflow Transformation Sprint are designed to make AI investments count. These programs deliver actionable decisions, optimized workflows, and accountability systems that drive measurable results. With named owners, clear KPIs, and adoption strategies, these aren’t just plans - they’re ready-to-deploy solutions aimed at delivering results in just 30 days.

FAQs

How can companies decide which AI projects to stop, continue, or scale?

To determine whether to halt, continue, or expand an AI project, businesses should rely on a data-driven framework that measures the project’s impact and ensures it aligns with their overarching goals. The Start/Stop/Scale method provides a systematic way to evaluate performance by considering essential factors like return on investment (ROI), scalability, and how well the project fits into the company’s strategic vision.

Projects that demonstrate clear key performance indicators (KPIs), deliver strong business outcomes, and are ready for wider implementation are prime candidates for scaling. Conversely, initiatives that fail to show measurable value or encounter significant obstacles should be re-evaluated or discontinued. Using a structured approach like this helps businesses make the most of their AI investments, driving sustainable growth and delivering meaningful results.

What are the main reasons AI projects often fail?

AI projects often stumble because of vague strategies, misaligned priorities, and pushback within organizations. Companies frequently pour resources into AI without clearly identifying specific use cases or setting measurable goals tied to business outcomes. Instead of addressing real problems, these projects often become more about showcasing technology, which leads to wasted time, money, and effort without delivering meaningful results.

Resistance within organizations is another major hurdle. Employees might worry about losing their jobs or feel unprepared to work with AI due to a lack of knowledge or training. On top of that, if leadership isn’t fully on board - whether through weak executive support or unclear roles and responsibilities - AI initiatives struggle to gain traction. This combination of challenges explains why so many AI pilot programs fail to achieve measurable success, even with substantial investment behind them.

How does the Start/Stop/Scale framework help maximize AI ROI?

The Start/Stop/Scale framework is a smart way for organizations to maximize the return on their AI investments. It focuses on pinpointing which initiatives to start, stop, or scale, ensuring that resources are directed toward projects with the greatest potential to make a difference. This method cuts down on inefficiencies and avoids unnecessary spending.

By rethinking workflows, governance structures, and accountability systems, the framework helps businesses zero in on measurable outcomes. This focused strategy turns AI investments into real, actionable results, closing the gap between adopting AI and achieving meaningful business benefits.